/Talking About Machines/: copier repair technicians and story-telling

Download MP3Welcome to oddly influenced, a podcast about how people have applied ideas from *outside* software *to* software. Episode 20: /Talking About Machines/: copier technicians and story-telling.

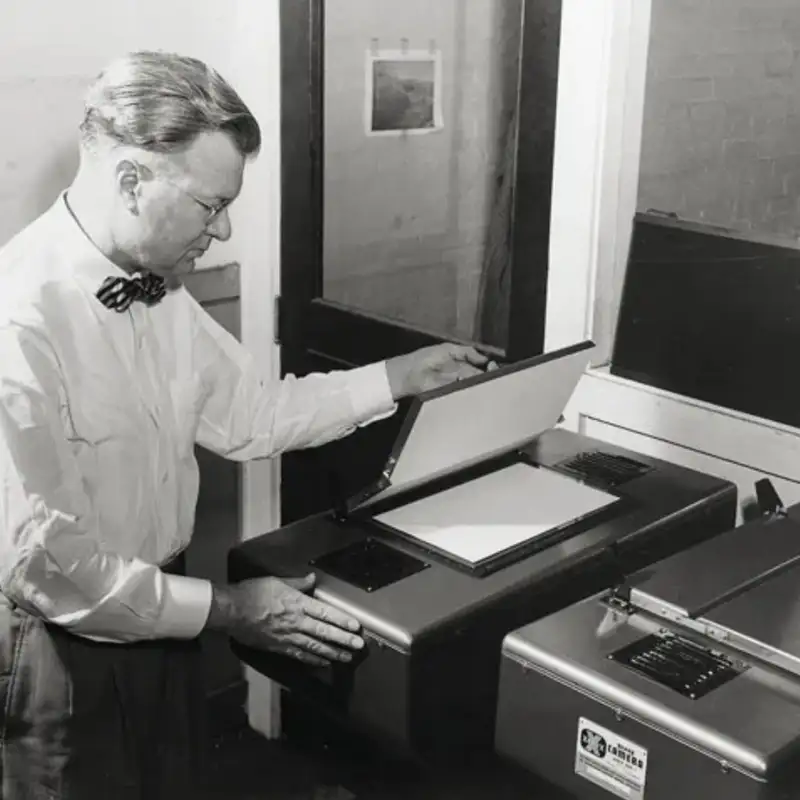

Sometime around 1990, Julian Orr went and hung out with Xerox field service technicians (the people who repair photocopiers). He was acting as an anthropologist, doing what they call an “ethnography”. In 1996, he published a book titled /Talking About Machines: An Ethnography of a Modern Job/. This episode is about that book.

Before I start the introduction, a note: part of what I want with all the episodes of this podcast is to provide examples and pithy quotes you can deploy when discussing how to do work. So I should point out that every episode has a script, and all the interviews have transcripts. You can find them in each episode’s show notes.

And I apologize for the delay between episodes. I really do hope we’re done with family emergencies for a while.

I also apologize for the length of this episode. I find how different jobs work endlessly fascinating, and I indulged in that here. Also, since one of Orr’s key points is the importance of war stories, I tell a couple of mildly long ones myself.

If you, like me, are suspicious of academics studying work they themselves don’t understand, you’ll be happy to know that Orr was a technician in the Armed Services before he went to college, earned money as a technician throughout college, and took the Xerox course on the main copier the technicians he was studying worked on.

Orr starts his book with a series of vignettes of life as a technician. I don’t have time for those, but I’ll describe what a day in the life of a field service tech looks like. I’m going to call the technician Todd because Todd was the Digital Equipment Corporation tech that I met often when I was a computer operator on a DecSystem-10 computer system for three years starting in 1978. Our Todd, though, will repair photocopiers.

Todd works in Silicon Valley. Silicon Valley’s dominant species is the automobile. Todd is responsible for a set of companies that are roughly clustered together (to minimize his time driving from problem to problem). Let’s pretend he’s just finished a service call.

Given the time, it’s possible he had a cell phone, but he might have had to call central dispatch on a pay phone. He’d call and ask the operator if he had any “call-outs”. What that means is that all Xerox customers in an area had the same number to call when they had problems with a copier. The central dispatch operator would take down the information so it would be ready when a tech needed something to do. If there was work for Todd, he’d take one of the call-outs. If he had nothing to do, he’d ask if any of the other techs in his team had pending work, and he might take one of those.

He’d drive to the customer site, and (most usually) pass through some mild security. He’d go to where the unhappy copier was. There, he’d meet someone who’d describe the problem. With luck, the copier would be expensive enough that it had dedicated operators. The alternative would be that it was a copier any random person could use to make copies. Those are worse for technicians because they’re more likely to be abused and random users are much worse at explaining problems than the average operator is.

Starting from this interview, Todd would begin diagnosing the problem, which is the hardest part of copier problem-solving. He’s *supposed* to rely on Xerox service documentation that will guide him through the whole process of diagnosis and repair. He’s to look up the symptom in (what was still probably, given the day) a loose-leaf binder. The instructions would tell him which tests to perform. The results would be organized as a decision tree: “Does the error log contain error E053? If yes, go to 10, If no go to 20” – that sort of thing.

If you listened to the previous three episodes, this is a prime example of Xerox “Seeing Like a State”, and it worked about as well as Scott has primed you to expect. Lots of the real problems weren’t in the documentation. Some of the instructions were wrong. And so on.

So Todd would use the service documentation, sure, but also the schematics that Xerox still supplied the techs (under protest), past experience, lore he’d learned from fellow techs, hints from what the user had said, and anything else he could, to come up with a diagnosis. Copiers were very complex, and diagnosis was sometimes easy but sometimes very hard.

If Todd couldn’t figure out the problem, he might bring it up in the restaurant where his team ate lunch. He’d ask for advice. I imagine that a lot of the advice was straightforward: “look for *this* in the error log”. But a big chunk came in the form of “war stories”: “I remember this one time…” - I bet you, my listener, have heard similar stories. Maybe they bored you. I’m going to suggest they shouldn’t have.

Alternately, Todd might ask a fellow team member to come help. Interestingly, the help often came in the form of *yet more war stories*these told standing over the copier. War stories are key to Orr’s account.

Technician Todd cares about two things: fixing the machines and presenting a good image. The reason for the latter is that, back then, copiers were usually leased to companies rather than bought. The leases were easy to break: a customer could just decide to tell Xerox to come and take back their crappy copier. Techs didn’t want to be mentioned as part of the reason the customer was upset.

The interaction between “presenting a good image” and “doing good work” is one of the interesting parts of Orr’s book.

But let’s get back to the podcast. For the rest of the episode, I want to describe what in Orr’s book resonated with me when it comes to debugging, testing, programming, managing-your-manager and all the other ways I got a flash of “he’s talking about copiers but I recognize that dynamic.”

A warning. The most *obvious* parallel is to the sort of operations work that involves dealing with problems in complicated networks of servers. Unfortunately, I know practically nothing about ops, except that twitter says they’re all furries and dye their hair bright, unnatural colors. (Not that I trust twitter.) I’d love to interview someone who knows ops well and can make some compare-and-contrast-style comments. Until then, I’ll speak to what I at least sort of know.

The first interesting comparison is that copier techs are, in the terminology of the last three episodes, *illegible*. Can the managers of techs determine if they’re doing a good job? No, they cannot. Only another tech can really judge a tech’s work, and techs very rarely become managers at the level that does performance reviews or salary bumps. So: Tech A is faster at routine maintenance than tech B – is that because tech A is more skilled or more sloppy?

Or: A tech had to come back to the customer because the fix didn’t actually seem to fix the problem. Did the tech diagnosis it wrong? Diagnose it right but botch the replacement of a broken part? Do the replacement correctly but the part came pre-broken from the factory? It’s impossible for a detached judge to know.

In classic High Modernism style, judgment of techs was offloaded to a subjective opinion made to appear objective. Xerox had a contact in each customer site, which they called the account manager. To judge a tech, they sent a survey to the account manager.

This has all sorts of problems, beginning with how the account manager actually has little contact with the day-to-day operation of any copier, and including that their job is balancing the needs of their organization with the cost of the copiers, *not* understanding how copiers – or copier techs – work.

It’s interesting how the techs reacted to where authority lay. They reacted by *making visible* their virtues.

The most obvious way was that Todd wore a dress shirt and tie. Orr isn’t specific but I remember those days. If Todd wore a suit coat to the site, he’d take it off first thing. That particular style of dress was a signifier that you weren’t an executive but you still weren’t low-status, like secretaries or copier operators. You were situated in that awkward middling class of white collar workers who exercise some independent judgment. I’m sure suit and tie (or the female equivalent) was the Xerox dress code for techs, but – at least according to Orr – the techs actively used it to show themselves as what they called “professional”. That is, someone who *thinks* rather than just follows flow charts like the ones in the service documentation.

Technicians had a dirty job compared to the average white collar worker. After all, copiers are mechanical devices with surfaces that need lubrication, they accumulate chads in their innards, and they *exist* to spray what’s effectively dirt onto paper. But the technicians were careful to keep the environment and themselves clean as they worked. They placed great store in an orderly work environment, with each tool or part in its place. Although a diagnosis job necessarily has its share of backtracking and false starts, they took care to proceed systematically, and a good technician was always ready to explain the “why” of what he was doing. (That was one of their gripes with the Xerox service documentation: it didn’t explain *why* the fix resolved the problem, or even what the problem actually was. So it worked against the techs’ desire to seem professional.)

Their message was “I am a competent knowledge worker”, worthy of praise. The intended recipient of the message was the account manager who would be reviewing them based on no actual knowledge of their skills. So, for example, Todd might make a point of finding the account manager and explaining the problem, even though the account manager didn’t really need to know.

The emphasis on always displaying competence was so extreme that “If, for example, a technician should inadvertently go to the wrong machine at a customer site, the technician cannot simply walk away and go to the correct machine but must do some minor service before leaving.”

Here’s another example: Todd would be carrying commonly-used parts in his trunk. And if he thought he knew the problem from the description the phone operator took down, he might stop at a central store to pick up a part he thought likely to be useful. If, nevertheless, he discovered he needed a part he didn’t have, he’d try to hide that. He might do other necessary maintenance work on the machine until it was time for lunch. During the lunch break, he’d pick up the part, preserving the illusion that he’d had everything he needed all along.

I should note that while there’s showmanship to this, it’s not *fakery*. The qualities the technicians display – cleanliness, order, a systematic approach, preparedness, coherence of narrative – were the same qualities they thought *made* good technicians. They just made sure other people noticed the qualities they knew they had.

We in software are more likely to have bosses who used to do our jobs, but I think a lot of programmers are in the position of the techs: being judged by people who can’t judge what we do. We’re illegible. Software testers have it even worse: is this person not finding bugs because bugs aren’t there to be found? Or because many bugs are being missed?

Since we’re similar in illegibility to the technicians, it’s odd that – as far as I can tell – we don’t manage the perceptions of those around us. I’m not so much talking about shirt and tie – those days are over – but about manifesting virtues like being systematic, orderly, and disciplined.

I’m as blind to that as anyone is. In the early days of Agile, Agile was frequently called undisciplined - “you mean you just start writing code? without a design?”. Agile was frequently compared to “cowboy coding”. (I don’t know why cowboys are supposed to be such a bad example.)

Pushing back, I made a big deal about how Agile requires more discipline than conventional coding, and how techniques like pair programming and no code ownership were in part ways of enforcing discipline through peer pressure. (You couldn’t invisibly slack off - someone would see.)

But it never occurred to me that people should *present* as disciplined, not just *be* disciplined. I can’t account for the blind spot.

I wonder how this will play out in the coming age of remote work, when people are even less visible to their bosses and peers than before. Will it spur more of, as the religious saying goes, “outward and visible signs of inward and spiritual grace”?

Since I have no idea, let’s move on to a related issue.

There’s a saying that it’s horrible to have a job where you have responsibility but no authority. According to Orr, techs have an extreme version of that job.

They have responsibility for maintaining copiers so they don’t break, and fixing them when they do. The existence of a broken copier is *very* visible. While the tech is fixing it, people are waiting to be able to make their copies. Techs can never make people happy – they can only restore them to their default state of happiness, whatever that is.

Instead of “authority”, Orr uses the word “control”. In particular, technicians have no control over other people. They can’t keep people from making more copies per month than the machine is rated for. (Overuse is a major contributor to breakage.) They can’t keep sales from causing overuse by agreeing to lease a machine that’s too small. They can’t keep users from misuses like using originals with the staples still in them. They can invite users or operators to learn how to use the copiers correctly, but they can’t *make* them attend such meetings, and they generally don’t. Technicians aren’t consulted about the contents of the service documentation: that comes from technical writers channeling the engineers who design the copiers. They can’t influence manufacturing quality. And so on.

The techs *try* to have what influence they can. They have a saying: “Don’t [just]: fix the machine; fix the customer!” They try to woo operators, for example, into talking about the machines, so that the techs can teach them what to notice about the machine and how to explain problems – especially intermittent problems – in a way that’s useful. They teach them enough of how the technicians see the machine that the operators are useful to them.

But, generally, such efforts only have limited success. The techs are forced to acknowledge that their machines will be misunderstood and misused. To some extent, it’s the techs and the machines against the users. To another extent, it’s the techs against the machines. The machines, at least some of them, have what you might call personalities. Some machines just *like* to break, and it’s a triumph when you fix them despite themselves.

In this environment, where *everything and everyone* outside the technicians’ team is dangerous or at best neutral, it’s not surprising that techs form tight-knit communities. In the words of the song, “it’s you and me against the world”. That’s exacerbated because there’s not really a career path for technicians. You can become a “technical specialist” if you’re particularly good, in which case you’ll spend a lot of time helping other techs. But won’t be promoted, as far as I could tell from Orr, to work with engineers on designing more maintainable and fixable machines, or even to help writing documentation for technicians.

What’s the lesson here?

Let’s agree programmers and testers and tech writers often want to be involved in the upfront work: designing the external interfaces to features or creating stories. That should mean an *explicit* effort to woo product owners or user experience designers into understanding the right subset of the job. What about a feature makes it easy to program? to test? to write help text for?

And product owners and user experience designers should also seek to educate their peers. There was a brief flurry of colocated teams around the turn of the century. Dedicated team rooms made cross-functional learning more natural – something that happened as part of normal conversation, not something having to be scheduled. But those days seem to be gone. Some substitute ought to be found.

My final topic – my main topic, really – is war stories. Technicians tell war stories at lunch, before meetings, and when working together at a copier. Orr thinks they’re essential. He describes the team as “a socially-distributed resource diffused and stored primarily through oral culture”.

First, what is a “war story”? As a story, it has a main character (sometimes the speaker, sometimes someone who told the story to the speaker). It has a series of events that happened through time, often culminating in an Aha! moment when a problem is solved. It’s called a “war” story because it’s about an unusual situation or a seemingly-typical situation that takes a novel twist.

War stories have three purposes according to Orr. Sometimes it’s to help someone solve their immediate problem. Sometimes it’s to preserve hard-won knowledge by spreading it around. Sometimes it’s to amuse or otherwise cement everyone’s identity as “a technician”.

Let’s start with problem-solving, specifically diagnosis. Orr characterizes the difficult diagnosis as follows: “The question of interpretation is critical; technicians working on incomplete diagnoses often say that they believe that they know the crucial facts, but do not recognize them. The hard part is recognizing the significance of any given fact.” What’s required is that Aha! moment when the facts reconfigure themselves into a new, coherent story, meaning that it’s clear how any two facts relate to each other and you can tell a coherent causal narrative of the machine’s failure.

War stories are told to help change a tech’s perspective on facts they already know. Quote: “Some appear to be startlingly irrelevant until one realizes the point is not just to consider the symptoms but also to jar one’s perspective into focus.” But there’s a variety of stories. Some are used to eliminate suspects: a story of a previous diagnosis is told as a claim that *this* problem can’t be *that*. Others are stories that reinforce the need to think clearly, emphasizing how small can be the fact that leads to a solution. So one tech might tell another a story about a time when not noticing a minor machine noise led to wasted hours. That story specifically says “are you overlooking relevant noises?”, but the more general exhortation is that minor facts can have major significance.

Other stories might remind that a seemingly major fact might be irrelevant. For example, one copier visit prompted a story about an E053 error in the log that was misleading *unless* it happened to be followed by an F066 error (which might not happen for a long time). There wasn’t an E053 error in the copier in question, so the story seems pointless. But the story’s reminder of how the *real* clue was found in the DC20 log serves to remind the listener not to fixate and that logs can be wrong.

As technicians close in on a diagnosis, war stories can be used to firm it up. “Should a recommended course of action be thought improbable, the recommendation may be augmented by short accounts of how it worked for some other known technician. Similarly, if a suggestion of a possible cause for the problem is met with the protestation that that is simply impossible, the response is likely to be a story in which the problem [was] exactly that, despite the same set of objections.”

I have a war story like that. Once, in the ‘80s, I worked on what I believe was the first symmetric multiprocessor version of Unix. Previous versions only allowed one processor to execute the kernel (the operating system) at once. This new version allowed all the processors to be in the kernel at once. This introduces all the problems of concurrency: two processes accessing the same data at the same time. We used to joke that the development process was scattering locks throughout the code and debugging it into submission.

At that time, I was responsible for testing the kernel. I happened to have hacked the GNU C compiler to make it a test coverage tool, and I think it was the head of the multiprocessor effort, Gary Whisenhunt, who suggested that I implement a new kind of coverage: measure how often each C subroutine was entered by one processor while another processor was already executing it. Now, if you think about it, most concurrency bugs are not caused by that: it’s rather that one processor is in subroutine A while another is in subroutine B, and A and B share data. However, the so-called “race coverage” was still useful. Here’s how.

Because the coverage tool processed files in directory structure order, if you printed out the race coverage for all the subroutines, related subroutines would be shown together. I would take a big printout (on fan-fold paper) to Gary. He would flip through it and find subsystems where few files had been “raced”. That was a clue that the whole subsystem – all its subroutines – had been under-exercised. He’d point at a page of the printout and say, “I *know* there have to be bugs there.”

I would then go and modify my testing app, “churn”, to generate lots of processes that would bang away on that subsystem via the system call interface. It was great fun for me as a tester, because I only once failed to find crash bugs.

Once, I conceived of the idea of churning the system call that let you mount and unmount disks (think of plugging and unplugging a USB drive into all the available ports all at once, again and again and again). Sure enough, I found a crash bug.

That got me in trouble with what today we’d call the “Chief Technical Officer” because, really, on the big expensive machines of the day – or even the little ones of today – how often are people going to be mounting two disks at the exact same time. He, um, *suggested* that maybe I should focus on things that *could actually happen*. (The project was kind of high stress on the development side.) The bug was marked as having high severity but low priority, and it didn’t get fixed.

Some time later, a customer reported a crash. It was not a bug surfaced by simultaneous mounts, but the customer’s crash had the same underlying cause as my mount crash. Had my “impossible in real life” bug been fixed, the customer crash would have been avoided.

Back in the day, I used to tell this story to reinforce two points. First, that it’s fine to try unlikely scenarios when testing, but – second – it’s wise to then practice “failure improvement” by playing with the bug to see if you can make it more compelling. Very often, you can, and being able to do so is a mark of a really good tester.

A story like that is maybe a bit long to be told while standing over a machine. It’s more the sort of thing that would get told at lunch, or while waiting for everyone to arrive for a meeting. Technicians told stories in those situations, too. They were intended to both spread technical knowledge of ways copiers can fail, but also to reinforce the team’s image of how a good tech behaves. For example, a story might be told of the trouble a tech got into because he wasn’t orderly and systematic enough. Or a story might be told about how a tech handled a particularly ornery account manager.

Such stories shade over into celebrations of the unique culture of the technicians. They’re hero stories, stories of crazy customers, and the like. They tend not to be about particular techs so much as they’re about the *role*. They build community.

One interesting kind of such a story is the story of a catastrophe. For example, one tech told the story of how he accidentally set the copier on fire during a customer visit .

Here’s a catastrophe story of mine. I used to fly gliders (engine-free aircraft). Once there was a thunder storm coming in, but I wanted to get one last flight in. I was towed up by the tow plane and released, flew around for a bit below the cloud cover. There was good lift. Then I decide to land, so I pushed the stick forward to go down.

I kept going up. That was disconcerting. You do not want to get sucked into a thunderstormy cloud.

So I put on the dive brakes. Those are vertical surfaces that can stick up out of the wing and really spoil the lift.

I kept going up. This was, more than disconcerting.

I kept the dive brakes out and skewed the plane sideways to make it even less aerodynamic. That finally got the aircraft to descend.

At this point, landing seemed like an excellent idea. A usual landing has you traverse three sides of a rectangle. First you fly with the wind parallel to the runway but some distance off to the side. Once you get past the end of the runway, you make two right-angle turns to line up with the runway and land into the wind.

I started the first leg of the landing and called it in. The radio came on. “soaslkjslajsd”, it said. “Say again”, I said. “Sqlkjelkrjklkje” it said. I was already flustered, and this was not helping. Finally they got Christie, that day’s tow pilot, on the radio. Higher pitches are easier to understand through static, so I finally learned from her that the wind had flipped 180 degrees, so I was planning to land downwind, with the wind.

That’s not horrible. It’s something you practice. But it’s not ideal, because as the aircraft slows down, it’s eventually flying at the same speed as the wind, which means your flight surfaces can’t get any purchase, so you lose control. Then, when you slow down further, air is flowing backwards over the surfaces, so you have to work your controls in reverse – sort of like writing while looking only in the mirror.

So I did a 180, planning to do the usual three legs in the opposite direction. But I was *really* flustered now, so I flew too far on my downwind leg. When I turned my two right angles to land on the runway, I was too far away to make it. (No engine, remember?) So I landed in the cornfield past the end of the runway, what’s called “landing out”.

That’s my story, like the copier-on-fire story. Like that story, there were some lessons for the less experienced. They were, “don’t be too eager when a storm is moving in”, “don’t just assume the wind hasn’t changed direction: check the runway’s wind sock”, and “higher pitched voices are easier to understand”.

But there’s something else. The technician’s story about the fire began with, quote “Until you really break something good, you’re not a member of the team.” I was told the same thing about landing out. In fact, as the latest person to land out, I got temporary possession of the “Lead C”, a lead disk with the names of everyone who’d landed out engraved on it. And, indeed, there was the name of my instructor, and our vastly experienced main tow pilot. I was now one of the gang.

Oh yeah, this isn’t a podcast about my war stories. So what lessons might be here for software?

First is the usefulness of war stories to encourage the right kind of thought and behavior and comradeship. I get the impression that we tell war stories less than the technicians did.

Second, team-building happens when the activities or conversations are *about* the work. It’s not enough to go to lunch together or have “virtual happy hours” if you don’t talk about work. Be suspicious of retreats where you play bonding games involving falling backward or climbing trees or doing whatever it is that people do these days.

Another lesson is the importance of “slack”. Remember that the war stories are often told when waiting for meetings to start. Savvy managers let the stories extend past the scheduled start of the meeting, if they’re going well. Unsavvy managers are all about “efficiency” and starting on time. Be savvy.

Technicians are lucky, in a way, because they’re unscheduled. Sometimes there’s nothing to do, and people are hanging around waiting for something to happen. That’s when the experienced techs tell their stories.

We tend to be more tightly scheduled, but usually have the freedom to vary our processes, at least somewhat. So let’s explore. How could storytelling be merged into a standup? Suppose that people only *usually* do the whole “announce briefly what I did yesterday” thing. In the other times, brevity is sacrificed so that someone can tell the *story* of something exceptional and educational that they did or experienced .

Or, what if a retrospective were occasionally organized around stories? Telling the story of something… suboptimal that happened might be a way of making the issue more striking, more memorable, and encourage a feeling of not wanting to hear *that* story ever again. Indeed, a memorable enough story might in itself help keep it happening again. (This may be an existing retrospective method – I’m way out of date – but a quick search didn’t find anything like it.)

Let me know if you try anything like these. Remember that social.oddly-influenced.dev is a Mastodon instance just dandy for that.

And thank you for listening.