E2: Viruses, Cancer, TDD, and "Packages": Part 1

Download MP3Welcome to oddly influenced, a podcast about how people have applied ideas from *outside* software *to* software. Episode 2: How theories spread better when packaged with tools and mostly-routine procedures.

This is the second part of a three-part series on how people with very different motivations and understandings can nevertheless coordinate their work. It will be about Joan Fujimura’s notion of “packages”.

Embarrassingly, the topic of packages itself will probably require three episodes to cover. I’ll try to avoid any more nesting of parts within parts going forward.

Before I start, though, I want to give a shout-out to James Thomas, on twitter as @qahiccupps. (See the show notes for the spelling.) After listening to the previous episode, he asked this question: “I wondered whether you thought business value could be a boundary object between developers and the business?” The answer is No, and I’m kicking myself. I think that’s exactly the way to address my little rant at the end of episode one, so I recommend the thought to you.

My coverage of packages will start by setting the stage with some history of test-driven design (from now on called TDD). Then I’ll go into a fair amount of detail on how a particular theory of the cause of cancer achieved sudden and huge popularity in the 1980’s. I’m getting most of my information from Fujimura’s 1997 book: /Crafting Science: A Sociohistory of the Quest for the Genetics of Cancer/. I’m also using a chapter of hers in Andrew Pickering’s book /Science as Practice and Culture/, which has the advantage that there’s an online PDF you can read for free. See the show notes.

At this point, I have a confession. A lot of this episode is a history of tumor virology and recombinant DNA. I’m being self-indulgent, because I give more information than you really need to understand Fujimura’s idea. But I think the story is interesting, and it is a nice illustration of one of my hobbyhorses: how often progress is *contingent*, due to luck. That’s something a lot of software people don’t understand: it’s important to be the right person, but you also gotta be at the right place at the right time.

If you don’t want to understand the lonely quest of tumor virologists for relevance, I’ll tell you when you can skip the rest of this episode. I’ll summarize the key points in the next one, which applies Fujimura’s ideas to software.

Around the turn of the century, there was what I’d describe as a bandwagon around TDD. The core of TDD, to my mind, is that the programmer writes one test, watches it fail (because there’s no code to make it work), then writes the code to make it work, watches that test (and all previous tests) pass, pauses to think if there’s any cleanup to do that would make the code better in some way but keeps all the tests passing, then writes a new failing test.

I was a bit irked by the fantastic rise of TDD, as *I’d* written in an 1994 book that programmers should write their tests before their code, and I taught courses to companies based on the book, yet *I* didn’t get a bandwagon.

Why not?

Two reasons.

The first is that I completely missed the importance of the “write one test, then make it pass, then write the next test” feedback loop. I thought you should write all the tests first. That’s really not different than writing a rigorous specification or requirements document first, then writing all the code. And so I missed the point of TDD, which is feedback and reacting to change, and making steady, confirmed progress.

The second reason is that TDD happened to follow Fujimura’s recipe for success, and I very much did not.

Fujimura thinks that bandwagons happen in science when theories are combined with tools and procedures in a certain way. The theories are semi-vague, in that they can be adopted by lots of people because they’re compatible *enough* with those people’s own theories. But the tools and procedures are mostly-mechanical, in that they’re done mostly the same way in different situations, so the practical day-to-day work in different laboratories becomes aligned around those tools. Theories in some sense piggyback on tools.

Fujimura studied the bandwagon around the “proto-oncogene theory of cancer”. I’ll explain that later, but it’s essentially the theory of cancer you probably know today: cancer starts when some DNA in a cell gets damaged and causes that cell to begin dividing madly.

Fujimura’s research question was, quote, “How did a conceptual model like the proto-oncogene theory become accepted so quickly across disparate situations, across many laboratories, and even across different fields of research?”

*My* question is “How did a conceptual model like TDD become accepted so quickly across disparate code bases, across many teams, and even across business domains?”

I’ll now explain the proto-oncogene theory. I want to give my usual disclaimer – I might have messed up my explanation of the history or of the science – except doubled. I’m not a person who *gets* biology. The thing is, your body is a gross kludge, at both the macro and micro scales. NOTHING about it follows what we would consider good design principles. Everything biology is such a mess that I have trouble wrapping my head around it.

Now. This is the place where you can skip the rest of the episode.

So let’s talk about cancer. Our story is set in the 1970s and 1980s, but people studied cancer long before then. What was known?

They knew that there could be a genetic predisposition to cancer: certain families were more likely to get certain cancers.

Ever since people noticed that chimneysweeps seemed to get a lot of skin cancers, scientists knew that chemicals seem to cause cancer.

They knew that radiation - even sunlight - can predispose people to cancer.

AND we knew that viruses seemed to cause cancer. That is, some cancers seemed infectious.

However, until the 1970s, tumor virology was something of a backwater. The first viral cancer was isolated in chickens. If you transferred tumor cells from one bird to another, the second would likely develop a tumor – but only if it was a bird from the same inbred flock. If you bought a random chicken, even one of the same breed, it wouldn’t get a tumor. The infectious agent was later shown to be a virus.

The second viral cancer discovered was a mouse mammary gland cancer that could be passed from mother mouse to baby mouse: but only in one particular horrendously inbred strain of laboratory mouse.

These did not seem very significant. In particular, there didn’t seem to be any such tumor viruses for humans. (It turns out that we now believe that 10-20% of human cancers are caused by viruses, but that number came later.)

Still, people wanted to figure out how viruses work, and tumor viruses were a good thing to study because they tended to have extra-small genomes.

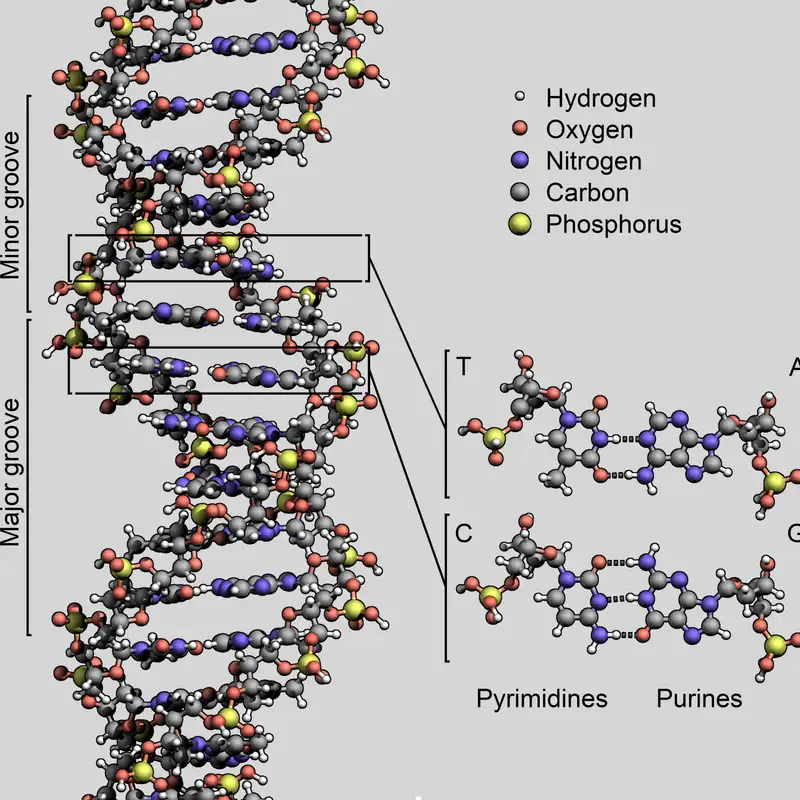

Remember: although the structure of DNA was revealed in 1953, it was hard to *do* anything with DNA until the 1970s. There were no gene sequencing machines. Figuring out a DNA sequence was something like debugging a huge software system without being able to look inside it. Instead, you would have to inject bug-provoking inputs, get the system in a bad state, then smash it into pieces and stick the pieces into a centrifuge to separate the them by weight, and then figure out the bug by looking at the distribution of pieces. Not the easiest thing to do. So you wanted to work on really short sequences.

A typical tumor virus would have only three genes: one to make the protein that forms the outside of the virus, one to make the internal structure, and one to make some enzymes the virus needs to get its own DNA grafted into the cell’s DNA. Once inside the cell’s DNA, the cell’s normal processing *may* use the viral DNA to make more of the proteins and assemble them into a new virus copy. (I say *may* because sometimes the RNA just sits there and never gets used to make proteins. And sometimes it makes enough virus particles that the cell gets destroyed. But sometimes – especially with tumor viruses – the cell keeps living, doing its thing, but also producing and shedding viral particles. Remember: all of this is a gross, interconnected kludge.)

But what makes a virus a *tumor* virus?

Suppose you’re a virus. You can infect a cell and get it to produce copies of yourself. But a typical cell just sits there, either not dividing into two cells or not dividing very often. You know what would be better? If you could turn that cell into a *cancer* cell so that it would divide like mad, each descendent cell *also* producing copies of yourself. And also producing more descendent cells. Exponential growth of virus factories! Now that’s winning, for the virus.

So, in the 1960’s, some people compared two strains of a virus: one that produces cancer and one that doesn’t. They discovered the tumor-causing strains’s DNA (RNA, strictly) weighed 20% more than the other kind. (Remember, we’re using centrifuges to deduce things based on weight.)

After further laborious work, they discovered that all the extra DNA was clumped together at the end of the viral genome. That looks like a new gene - a cancer-causing gene - which they called an “oncogene”. (Oncology is the study of cancer.)

That’s pretty cool, but remember: tumor virology is a backwater.

Meanwhile, people trying to understand how animal cells work had worked themselves down to ever simpler cases. Animal cells are called “eukaryotic”. Those are the cells with nucleuses and lots of complicated internal structure. Too complicated, it turns out, for the technology of the time.

It would be simpler to work with bacteria: smaller, less stuff in them, no nucleus, easier to keep alive, and so on.

Viruses are even simpler: smaller still, they’re not really alive to start with, so you don’t have to *keep* them alive, etc., etc.

An especially neat thing is that there are viruses - called bacteriophages or just phages - that specifically infect bacteria. Much easier to study than the viruses that infect eukaryotic cells.

(If you’ve seen the viruses that look kind of like the old Apollo lunar lander – a big head with a column below it and creepy spider legs attached – that’s a phage. You, by the way, have uncounted gazillions of phages in the lining of your gut, little legs sticking out to catch unwanted bacteria that you’ve eaten.)

So that’s a good pair of organisms to study: simple phages and the relatively simple organisms they infect. It suits the technology available. You can figure out the normal activities of a bacterium by disrupting those activities with a virus, with the eventual goal of speculating how similar activities work in a eukaryotic cell, which are the cells we care most about.

And the scientists studying all this discovered something neat.

Bacteria don’t like being infected by viruses, so they’ve evolved defenses against it. One is called “restriction enzymes”, so called because they “restrict the virus from doing its job”.

In the process of viral infection, there’s a strand of viral DNA floating around inside the cell, looking to merge with some cell DNA, then start quote-unquote “expressing” viral proteins.

Suppose you cut the viral strand in pieces. Then those pieces get grafted into the bacterial DNA. But if a piece isn’t a complete gene, it can’t be turned into a complete protein, and so the virus’s fiendish scheme has been foiled.

But there’s an even better trick. Instead of snipping the DNA at some random place, what if a restriction enzyme were tailored to recognize a sequence of DNA nucleotides (the letters of the DNA alphabet) that was in the *middle* of a gene. And then snip the DNA there. Then you’d be *guaranteed* a non-working gene.

It turns out that bacteria do exactly that. In fact, because there are so many bacteria, and so many phages, bacteria have evolved *a lot* of restriction enzymes that recognize a lot of different sequences.

Wait! said the scientists.

We have enzymes that can cut DNA at particular places? And we have other enzymes that can graft snippets of DNA into longer strands of DNA? So - if we’ve managed to snip out, say, the gene that produces insulin, and we can graft it into a common bacterium, and we can grow zillions of bacteria in huge vats, don’t we now have a better way to produce insulin - or any protein we want?

Thus was born recombinant DNA or genetic engineering.

It was also possible to use this technology to much more easily sequence DNA - to find the ATGCTTCGG sequences that make up a particular strand of DNA. It wasn’t *as easy* as it is these days, but it was still a huge step up.

Moreover, all this work can be done with enzymes that you extract from bacteria. It can be really hard to figure out how to extract the first example of a particular enzyme, but once you’ve done that, making copies of the enzyme can become closer to mass production than specialist work, so a lot of things have suddenly become much cheaper, faster, and easier.

Meanwhile, back in the backwater of tumor virology.

Two people (J. Michael Bishop and Harold T Varmus) had an idea. Spoiler: they got the Nobel Prize for this idea, so you know it’s going to be a good one.

They studied that virus that caused cancer in chickens. People generally assumed that the virus had itself evolved its oncogene somehow.

However, viruses – especially this kind of RNA virus – are very sloppy about replicating themselves within a cell. Bishop and Varmus wondered if some particular virus had actually picked up the oncogene *from* a chicken cell - that is, the virus stole it rather than created it. Then it was conserved in viral evolution because it helps the virus replicate.

The obvious retort is: what about that chicken cell? Why on earth wouldn’t evolution have eliminated such a gene from the cellular genome way before the virus picked it up? What would cause that gene to be conserved in a chicken?

Warning: looking backward, the answer seems obvious. Lots of things are obvious once someone else figures them out. Figuring them out yourself is a different story.

Bishop and Varmus had all these recombinant DNA tools available to them, so they did an experiment. Around 1975, they used a DNA probe to look for an oncovirus-like gene in chickens. The probe was derived from the oncovirus DNA, tailored to match any DNA sequence similar to the oncovirus gene. It had a radionucleotide tag so they could see if it latched onto a matching chicken gene.

They discovered one: a gene in a normal chicken cell that was close to the oncogene in the virus.

Within two years, they’d discovered the same or similar genes in lots of animals: including fish, primates, and humans.

Discovering that gene in fish was significant. Genes that appear in fish and in their descendent species have been around a *long* time, so evolution is clearly conserving them – finding them valuable.

Later, someone found a gene in a chemically-induced cancer that was *identical* to a viral oncogene.

So we have the proto-oncogene theory: there are genes in normal cells that are *almost* cancer genes. They start out doing something useful. Mutation, due to chemicals or whatever, flips them to variant genes that are carcinogenic. At some time in the distant past, a virus picked up one of those carcinogenic genes and became a tumor virus.

So the real action, when it comes to cancer, was in the normal cells, where so-called “proto-oncogenes” do their job but sometimes get turned into oncogenes. The viruses are really bystanders.

The useful thing proto-oncogenes do presumably had something to do with cell growth (since cancer is uncontrolled cell growth). After better technology was developed, people did indeed discover that proto-oncogenes code for various types of growth hormone.

So here’s an interesting thing. Bishop and Varmus were doing tumor virology, not glamorous, but there were a whole bunch of other labs doing developmental biology (how cells grow, how the original fertilized egg develops into skin cells, liver cells, and so on). Suddenly Bishop and Varmus present those people with not only specific genes, but a general theory, and *tools* people in those other labs can use to do the job they’re already doing, but better. Tools that it makes perfect sense for those other labs to adopt. They can borrow enzymes from the tumor virology labs. They can send their postdocs to conferences to learn about those tools and how to use them. They can send graduate students to go work in other labs that already use the tools and shadow the people who already know them.

The same thing happened in multiple subfields and multiple labs: it was a bandwagon that really took off around 1986. For example, lots of people were interested in diagnostic tools for cancer. Well, the probe that Bishop and Varmus used to find the first proto-oncogene looks a *lot* like a diagnostic tool to find if a particular gene is causing some person’s particular cancer.

So lots of funding poured into labs working with the consequences of proto-oncogene theory. For example, Sloan-Kettering was the oldest and largest pure cancer research institute. It flipped its research emphasis from immunology (seeing if the immune system could be taught to kill cancer cells like it kills invading bacteria and viruses) over to a focus on molecular biology.

The proto-oncogene theory had become universal.

In the next episode, I’ll summarize what Fujimura thinks this history says about how theories diffuse across science . And I’ll apply her theory to software. Stay tuned, and thank you for listening.