Galison’s /Image and Logic/, Part 1: The stickiness of experimental tradition

Welcome to oddly influenced, a podcast about how people have applied ideas from *outside* software *to* software. Episode 8: Galison’s /Image and Logic/, Part 1: The stickiness of experimental tradition.

This episode is about Peter Galison’s 1997 book, /Image and Logic: A Material Culture of Microphysics/. Galison is interested in the machines that people have used since the early 1900s to detect and measure subatomic particles, the people who built and used those machines, and the people who collaborated with those people.

Galison has a number of conclusions relevant to us. I’ll present his major conclusion, about cooperation, next time. This episode, though, will be sort of the flip side of last episode’s discussion of why scientists change their careers. We’re going to talk about reasons why they *don’t*.

Galison’s history is organized around the conflict between two traditions of experimental particle physics, traditions he calls the Image tradition and the Logic tradition. As an example that’s closer to home, I’m going to use the long conflict between fans of statically typed and runtime-typed programming languages. This will let me speculate about some reasons why the book I started writing in February 2017, /An Outsider’s Guide to Statically Typed Functional Programming/, was so clearly going to fail that I abandoned it in December of 2018. Not that I’m bitter. Oh no. Not me. Not bitter. Not after *almost two years* of wasted effort.

The Image tradition started with Charles Wilson and his cloud chamber. He’d been working on it since the late 1800s. He started out wanting to replicate clouds, rain, and other atmospheric effects in miniature inside smallish glass chambers. He never really gave that up, but he also started using the same tools to investigate subatomic particles and the various quote “rays” that were being discovered around that time: X-rays, beta rays, alpha rays. (Only the first of these is actually electromagnetic radiation, but that wasn’t known at the time.)

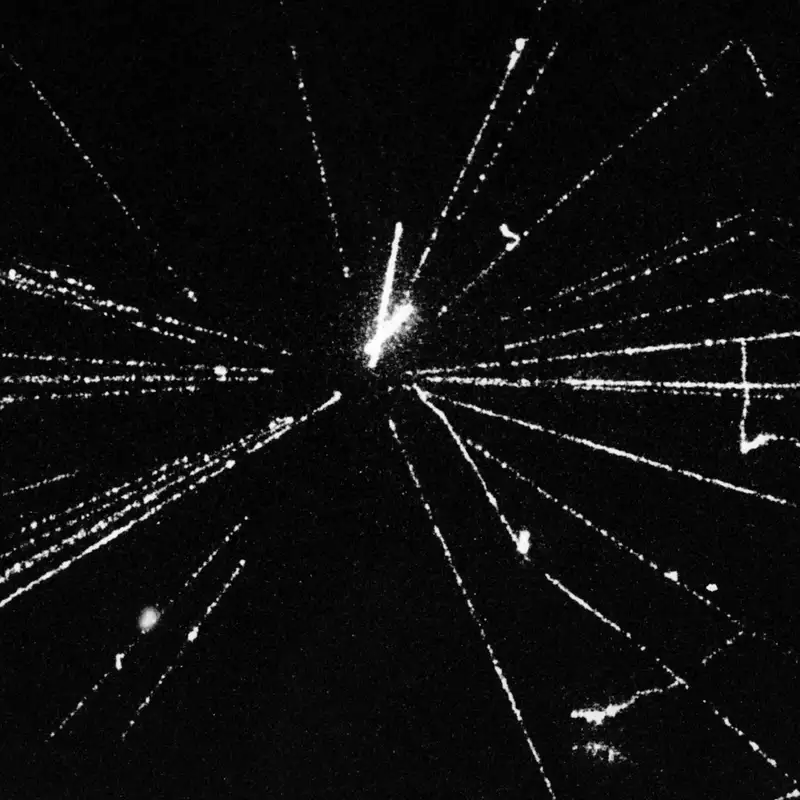

His 1911 cloud chamber represents an important milestone. It was a glass chamber filled with supersaturated alcohol vapor. X-rays, for example, could be shot into the chamber. They would ionize alcohol molecules (by tearing off electrons), and little cloud droplets would form around those ionized molecules. The droplets made tracklike structures.

When he used beta rays and alpha rays (which arise naturally from radioactive decay), they made much clearer tracks. Beta rays made thin, wispy tracks that often changed direction. Alpha rays made thicker, straighter tracks. This turns out to be because beta quote “rays” are single (very light) electrons. Alpha rays are helium nuclei, composed of two protons and two neutrons. They make a straighter track because they’re way heavier, and so harder to deflect. I *think*, but Galison doesn’t say, that they make a thicker track because they have twice the charge, and so can affect more molecules along their path.

Cloud chambers are appealing because they produce *pictures* of physical entities. They are a direct, one-to-one representation of a particle traveling through space.

The *Logic* tradition is based on counts and statistics and, after World War 2, very fast timing circuits. The Geiger counter, invented in 1908, is an early example. It would produce electrical pulses when exposed to alpha rays and helped confirm the hypothesis that alpha rays were really helium nuclei. However, as Geiger improved it to the point where it could detect single electrons (that is, beta particles), it fell out of favor. While it produced pulses when exposed to radioactivity, it *also* produced pulses when it was not. It looked like the more sensitive version was subject to misfires. For a good while, no one knew why that happened, and they couldn’t fix it, so the device came to be considered untrustworthy.

However, in 1928, Walther Müller discovered that the so-called “wild collisions” came from cosmic rays (which had been discovered a quarter-century before). Cosmic rays are high-energy protons or nuclei (like the alpha particle, but potentially heavier). That rescued the Geiger-Müller counter. As Galison puts it, quote “the Geiger-Müller counter went, without altering a single screw, from a device with a fundamental, poorly understood and bothersome limit of sensitivity to the most sensitive instrument in the cosmic ray physicist’s toolkit.”

But notice that conclusions that come from the counter are fundamentally statistical. Do you want to show that light can ionize gas molecules? You have to compare the quote “background radiation” counted when the light is off to the total count when the light is on, and make an argument that the excess is unlikely to be due to chance.
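To make that kind of argument concrete, here is a minimal Haskell sketch of one common back-of-the-envelope version. It is not from Galison: it assumes that both counts are Poisson-distributed, that the light-off run measures background only, and that the counts are large enough for a Gaussian approximation. The function name and the example numbers are hypothetical.

```haskell
-- Hypothetical sketch: how many standard deviations is the "light on"
-- excess above the background measured with the light off?
-- Assumes Poisson counts, large enough for a Gaussian approximation.
excessSignificance
  :: Double  -- counts with the light on
  -> Double  -- duration of the light-on run
  -> Double  -- counts with the light off (background only)
  -> Double  -- duration of the light-off run
  -> Double  -- approximate significance, in standard deviations
excessSignificance nOn tOn nOff tOff = excess / sqrt variance
  where
    alpha    = tOn / tOff                 -- rescale background to the light-on duration
    excess   = nOn - alpha * nOff         -- counts beyond the expected background
    variance = nOn + alpha * alpha * nOff -- variances of independent counts add
```

For example, 1,200 counts with the light on against 1,000 with it off, over equal times, gives an excess of 200 with a standard deviation of about 47, roughly 4.3 standard deviations. Whether that is “unlikely to be due to chance” enough is still a judgment the experimenter has to argue for.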

Or suppose that you have what you think is a source of charged particles, but a single counter isn’t enough to show a statistically significant effect. Then you can put two counters in sequence, pointed at the particle source, wired so that the second one only fires if the first one has just fired. It’s still possible that two different background particles will happen to hit the counters within whatever time interval you’ve decided counts as quote “just fired”, but it’s perhaps more likely that it’s a single particle passing through each detector in turn. Again, you turn to statistics to make that argument.
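The worry about two unrelated background particles tripping the counters within the window by chance can be estimated. Here is a minimal sketch, assuming (my assumption, not the source’s) that each counter’s background pulses arrive independently as a Poisson process at a steady rate, and that the second counter counts a pulse only if the first fired within the preceding window of length `tau`. The names are hypothetical.

```haskell
-- Hypothetical sketch: expected rate of accidental (chance) coincidences.
--   r1, r2 : background pulse rates of counters 1 and 2 (pulses per second)
--   tau    : coincidence window in seconds; counter 2 only counts a pulse
--            if counter 1 fired within the preceding tau seconds
-- Assumes both background streams are independent Poisson processes.
-- Each of the r2 pulses per second has probability 1 - e^(-r1 * tau) of
-- finding at least one counter-1 pulse inside its window.
accidentalRate :: Double -> Double -> Double -> Double
accidentalRate r1 r2 tau = r2 * (1 - exp (negate (r1 * tau)))

-- The usual approximation when r1 * tau is small.
accidentalRateApprox :: Double -> Double -> Double -> Double
accidentalRateApprox r1 r2 tau = r1 * r2 * tau
```

With 10 background pulses per second in each counter and a one-microsecond window, both functions give about 0.0001 accidental coincidences per second, roughly one every three hours. That is why a narrow window lets a genuine coincidence signal stand out.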

A famous example of the Logic tradition was the 1955 discovery of the antiproton, the proton’s antiparticle: same mass, but negative charge. The details are too hard to explain, but the gist is this. Theory predicted that slamming protons into a copper sheet would occasionally produce an antiproton. However, for each antiproton, you’d also get 62,000 negative pions. The experimenters had to reduce that number enough that the antiproton counts would stand out, statistically. They took advantage of the fact that the mass of a pion is considerably less than the mass of the hypothetical antiproton, so pions with the same momentum would be traveling faster. Their apparatus used five different counters of two different types, all wired in sequence. For example, counter S2 would only fire if counter S1 had fired between 40 and 51 *billionths* of a second before, the rough time it would take a typical antiproton to cross the space between the two counters.

And indeed, they collected statistical evidence that the antiproton existed.
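The S1/S2 timing condition can be stated as a filter over pulse times. This is a minimal sketch, not a model of the real 1955 circuitry: it assumes, hypothetically, that each counter’s pulses are available as a list of times in nanoseconds, and it uses the 40 to 51 nanosecond window quoted above. A real coincidence circuit made this decision in hardware, pulse by pulse, rather than by comparing recorded lists.

```haskell
-- Times are in nanoseconds (a hypothetical representation for this sketch).
type Nanos = Double

-- Keep only the counter-S2 pulses that arrive between lo and hi nanoseconds
-- (inclusive) after some counter-S1 pulse. Anything outside the window, such
-- as a particle that crossed the gap too quickly, is discarded.
delayedCoincidences :: (Nanos, Nanos) -> [Nanos] -> [Nanos] -> [Nanos]
delayedCoincidences (lo, hi) s1 s2 =
  [ t2 | t2 <- s2, any (inWindow t2) s1 ]
  where
    inWindow t2 t1 = let d = t2 - t1 in d >= lo && d <= hi
```

For example, with S1 pulses at 0, 100, and 200 ns and S2 pulses at 45, 130, and 241 ns, `delayedCoincidences (40, 51)` keeps 45 and 241 and drops 130, which came only 30 ns after the nearest earlier S1 pulse. Checking every S1 pulse against every S2 pulse is quadratic, which is fine for a sketch; a sweep over sorted times would scale better.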

Their discovery was soon confirmed by somebody looking at a photograph. Which must have stung, given all the work they’d put into their apparatus, but the Nobel Prize they got four years later probably took some of the sting out of it. Galison says this work quote “was widely seen as a triumph for the then-beleaguered logic tradition”. Why was it beleaguered? Because of the bubble chamber, working versions of which had existed for a few years by then.

The bubble chamber had replaced the cloud chamber. Cloud chambers had fallen out of favor for two reasons. First, there was a limit to how big they could be made. Second, they were filled with gas. As physicists began working with higher and higher energy (and thus faster) particles, those two limitations combined to make the cloud chamber less useful. To get good data about a particle, its entire track needs to be within the chamber. A particle starts fast and slows down as it ionizes molecules, eventually coming to a stop. But in a gas, a speeding particle won’t encounter enough molecules for that before it exits the rather small chamber. No endpoint, no data.

Bubble chambers are typically filled with liquid hydrogen. As a liquid, the hydrogen has more stopping power, and bubble chambers can be made bigger than cloud chambers.

The bubble chamber was dominant through the ’60s, but its great virtue was also a serious flaw: tracks formed in the interior, and they were captured by cameras that used film. In 1968, Berkeley’s flagship bubble chamber produced 1.5 million images. And those pictures had to be processed by people, mostly women, often the wives of graduate students or postdocs, all by hand. The computer technology of the time wasn’t much help. It was used, but only after the women had translated the images into data. They had elaborate workstations, with foot pedals that punched holes in IBM punch cards and all kinds of labor-saving mechanical and optical aids, but still. Highly manual.

I’ll finish up the story of the Image and Logic traditions later. But let’s take stock.

So we have two traditions, both of which had their ups and downs. Here’s an interesting thing: people stuck with the tradition they started with. For example, Frederick Reines had 316 publications. Only one had anything to do with the Image tradition, and even that one was comparing an image device to a logic device when it came to answering a specific question.

I want to describe some reasons why that’s not surprising.

First, any tradition has a lot of lore associated with it. This lore is often disconnected from any theory of why a particular instrument works. For example, quote “You will see one physicist state that after filling his chamber he waits for the gas to get dirty – he says it works better then. Some other physicist will scrupulously purify his gas with a calcium oven to remove oxygen from it. Yet another adds a ‘quenching gas’ while another adds nothing at all. In the end people do what’s wise – they [copy] whatever those [people] are doing whose chambers work well – without trying to understand why.” Generally speaking, theories of why instruments worked were informal, not mathematically expressed, not experimentally demonstrated in anything but the loosest way.

A good chunk of the lore persists through changes in base technology. In the Image tradition, lore persisted from the cloud chamber era, into the fairly short era when tracks were caught directly on photographic emulsions, and into the bubble chamber era. That happened even though the *reason* bubble chambers worked was completely different from why cloud chambers worked.

The Logic tradition had the same “conservation of lore” as counters moved to radically different physical implementations, and as people reused old circuits in new instruments.

If you’ve spent many years learning how to work within the Logic tradition, you’re not going to give it up just because Image is on an upswing. You’ll wait for the pendulum to swing back.

That sounds like a calculation bloodlessly comparing two expected futures. But that’s not how people work, not even scientists.

You see, the Image people *really liked* their direct representations of nature. If you saw a track that was just like a proton’s, but it curved in the opposite direction, that was *real evidence*. They understood there could be artifacts – all kinds of ways in which looks could deceive – so they’d often wait for several pictures before announcing they’d discovered a new particle. But there was a core belief that one single really good picture would be definitive. That the best evidence was a clear picture.

The Logic people didn’t buy it. They said that “Anything can happen once”. What you needed was statistical significance, from a carefully set-up experiment like the five counters that found the antiproton.

In turn, the Image people didn’t buy *that*. The statistical analysis of the antiproton experiment rested on a chain of assumptions. The Image people didn’t trust results that could be – non-obviously – bogus if one assumption was wrong. Wouldn’t it be safer to use a device – the bubble chamber – that had no *experiment-specific* assumptions built into it?

We have two different ideas of “experiment” happening here, what Galison calls “head to world” and “world to head”. In the “head to world” tradition, you use your head to carefully construct situations that allow the world to express its subtle truths. That’s what the Logic tradition was about. In the “world to head” tradition, you make yourself ever more sensitive to the world’s self-expressed truths. As the Image tradition people did.

How do you bridge traditions that differ on aesthetics, on different standards of what counts as a compelling argument, on how the world should be? You mostly can’t. And the Image and Logic traditions mostly didn’t, until they had to.

Here’s another example. In the heyday of bubble chamber physics, someone from the Logic tradition said:

“Part of my background made me want to know what was happening in the experiment while it was happening. I felt that it was much better when exploring the unknown to know where you are so as to better plan where you can go, rather than after the journey to only know where you have been. [And it’s different in] the bubble chamber business, the physicists took hundreds of thousands of pictures which then had to be developed and scanned, and didn’t know anything about what was happening in the experiment until a year or two after the experiment was already over.”

Here’s a person choosing a tradition not because it’s best in some abstract sense, but because it best scratches his own personal itch. Logic people liked control. They clung to the idea of a physicist building his own apparatus, soldering iron in hand, long after the Image people had had to give that up. (Do *not* put a soldering iron anywhere near 400 liters of liquid hydrogen.)

So we have a multi-decade competition between two traditions, inhabited by two different types of people. This competition was good for them; it pushed both forward. Galison says, quote:

“While casting aspersions on the other, each side insistently tried to acquire the virtues of its rival; logic had statistics and experimental control and wanted persuasive detail; image had the virtues of being fine-grained, visible, and inclusive but wanted the force of statistics, and [of] control over experimentation”

(Though it’s worth noting that each side, when adopting a virtue of the other, reinterpreted it according to its own style. Some of the ways the Image tradition used statistics must have seemed bizarre and backwards to Logic tradition people.)

Nevertheless, the Image and Logic traditions did merge in the end. I don’t know that Galison would say this, but I think it’s because the Logic tradition basically won. Experimental installations that involved giant electromagnets got so expensive that the Image and Logic traditions had to collaborate; there weren’t enough particle accelerators to go around. And everything about electronics, especially solid-state electronics (like the image sensor chip in your phone’s camera), got really cheap, so clever circuits allowed Logic people to produce 3-dimensional images.

There was a time when the Image and Logic traditions collaborated, uneasily and awkwardly, on the excellently named Time Projection Chamber. That was a really cool device in which the Image tradition had the most influence over the interior of a methane-and-argon-filled cylinder where two particle beams collided. But the detectors around the edges, including those that produced particle tracks, were in the realm of the Logic people.

But it seems the time of two competing approaches has passed. Modern-day experimenters just have a single “experimenter” tradition.

So, about that book of mine. It all came about because I don’t like JavaScript, and I had to write a front end for a web app. I chose to use Elm, a relatively new (at the time) statically typed functional language that is, roughly speaking, a simplified version of Haskell that compiles into JavaScript. I’d been using Clojure for some time, and was starting to use Elixir as my default language, so I was perfectly happy with runtime-typed functional languages. I didn’t consider them earth-shattering, but I thought they gave me modest improvements in the speed, expressiveness, and correctness of my programming. So why not use them?

I picked Elm partly because I thought I should give *static* FP languages a chance. Maybe I could get another modest improvement over, say, ClojureScript (a Clojure implementation that compiles to JavaScript). In the end, Elm was pleasant enough, but it struck me as rather *too* simplified. That led me to consider PureScript, another language that compiles to JavaScript but is much more like Haskell. And with that, I conceived a cunning plan.

You see, I was fortunate to have gotten into Ruby early, in 2001, which was before the first US conference devoted to it. I was also relatively early with Clojure, before *its* first conference (if the 2010 Raleigh conference was the first). Early-mover advantage had been kind to me in both cases. I thought I could do the same thing again with a new language, PureScript.

My plan for my new book was to spend a good number of pages on Elm, as a way of easing people into the static-typing worldview and the lore of programming with immutability, without null pointers, and so on. Then, once I’d finished with Elm, I’d discuss what it was missing. I’d add on new language features like better types and finer-grained control over changing the outside world. I’d justify and explain PureScript and its style.
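For readers who haven’t met it, programming “without null pointers” usually looks something like the following. Elm does it with its `Maybe` type; this sketch uses Haskell’s equivalent `Maybe` so that all the examples here are in one language. The address-book data and the function names are hypothetical.

```haskell
-- Hypothetical example data: names paired with email addresses.
type AddressBook = [(String, String)]

-- Prelude's lookup returns Maybe String: a missing entry is the value
-- Nothing, not a null reference that might blow up somewhere later.
emailFor :: AddressBook -> String -> Maybe String
emailFor book name = lookup name book

-- To use the address, the caller has to unwrap the Maybe and say what
-- happens in the Nothing case.
describe :: Maybe String -> String
describe (Just email) = "Sending to " ++ email
describe Nothing      = "No address on file"
```

Elm’s compiler rejects a `case` expression that forgets the `Nothing` branch; GHC reports the same omission as a warning when compiled with `-Wall`.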

I wasn’t looking to initiate some sort of Image&Logic-style Time Projection Chamber merger between two traditions. Without outside forces like the ones in particle physics (increasingly scarce resources and dramatically cheaper microelectronics), I thought software traditions had no reason to merge. Nor did I expect a Lakatosian “novel confirmation” like the ones in the previous episode, ones that would inspire big career changes. Like I’ve said, I was expecting to see only modest improvements.

But I anticipated that I could drain away people unhappy with JavaScript, and that those people would form a large enough niche for me, a consultant/training guy by trade.

The reason I gave up on my plan was that the PureScript community had a powerful immune reaction to my book and myself. The first inkling of trouble was a surprisingly strong backlash against using Elm as a bridge to PureScript. Because I’m old, it reminded me of Dijkstra’s broadside against BASIC (an old introductory programming language). Quote “It is practically impossible to teach good programming to students that have had a prior exposure to BASIC: as potential programmers they are mentally mutilated beyond hope of regeneration.” Which I think is bogus.

But I think a similar attitude fed into the backlash that I experienced. What I’d missed was that Elm is *outside PureScript’s tradition*. The tradition that PureScript comes from values deep understanding via clear links to mathematics, maximally powerful abstractions, and elegance of solution. That is not Elm’s approach.

The idea of a learner who started out with Elm, perhaps even *stopped* with Elm, provoked a powerful aesthetic judgment among PureScript people. As strong as that of a Logic person not being allowed to adjust the experiment as it happened. Or an Image person being told that human pattern-matching of images, quote “doesn’t count” because it’s not statistical in nature.

I want to try to explain the aesthetic shock I imagine they felt. If you look in the Elm standard library, you’ll find the usual: an array type, a dictionary type, a list type, and a set type, as well as less usual types like `Maybe` and `Result`. Every single one of those has a `map` function, which applies some chosen function to all of the contents and then wraps the result back up into the original type. For example, you could use `map` to negate every number in a list. In mathematical terms, that means all of those types (list, etc.) are *functors*. But Elm *doesn’t even mention that*. And it does not let you write a function that is declared to work on *any* functor. Such a function would automagically wrap the result in the original type. If you gave your function a list, you’d get a list back; if you gave it an array, you’d get an array back. In Elm, you’d have to write a different function for arrays than for lists, and so on.
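Here is a minimal sketch of that difference. It’s written in Haskell rather than Elm or PureScript; PureScript has a `Functor` class modeled on Haskell’s. The function names are hypothetical. The first two functions show the per-type style Elm requires (in real Elm they would be `List.map negate` and `Maybe.map negate`), and `negateAll` is the single function declared to work on any functor. It assumes the `containers` package, which ships with GHC, for `Data.Map`.

```haskell
import qualified Data.Map as Map

-- Per-type style (roughly the situation in Elm): one function per
-- container type, even though every body expresses the same idea.
negateList :: Num a => [a] -> [a]
negateList = map negate

negateMaybe :: Num a => Maybe a -> Maybe a
negateMaybe Nothing  = Nothing
negateMaybe (Just x) = Just (negate x)

-- Functor style: one definition covers lists, Maybe, Either e, Map k, and
-- any other Functor instance. The result keeps the shape of the input.
negateAll :: (Functor f, Num a) => f a -> f a
negateAll = fmap negate

-- Hypothetical example: the same negateAll applied to a Map's values.
negatedBalances :: Map.Map String Int
negatedBalances = negateAll (Map.fromList [("alice", 10), ("bob", -3)])
```

The `Functor f` constraint is what lets the result come back in the container the argument arrived in: a list gives a list, a `Maybe` gives a `Maybe`, and a `Map` gives a `Map` with the same keys.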

This is not the PureScript Way. This is not *in* the static FP tradition as I believe it’s developed over time.

Moreover, I would classify PureScript as coming from a head-to-world tradition, one in which getting your thoughts clear is a crucial early part of having them at all. My book, not just Elm, stank of a different tradition. It was, just like the title said, *an outsider’s* guide.

I come from a world-to-head tradition. When I write books, I let motivating examples accumulate – seemingly endlessly – before I generalize. I’m particularly fond of taking a wrong conceptual path, based on a plausible misunderstanding, showing the bad things that happen, and then redoing the path. To someone from a head-to-world tradition, the delay in *getting to the point* – that is, explaining, *exactly*, what a functor or monad or a whatever is – must be infuriating.

Something I *know* infuriates a subset of people is that I will let readers believe something *untrue* if I judge that full accuracy, too early, would get in the way of learning.

Here’s my key blunder: I thought Elm and PureScript came from the same tradition just because they have similar syntax and Elm is mostly a subset, feature-wise, of PureScript. They do not. Tradition does not hinge on such details, but on aesthetics, deep-set commitments to particular values, standards of argument, and the like.

Because of that mistake, my, um, contributions to the PureScript Slack and other venues tended to be of the form “Elm is PureScript’s competitor. In order to become as popular as Elm, PureScript needs to grow in the direction of a ‘whole product’, complete with all the less glamorous bits. Elm has really good documentation, so let’s all get together and establish similar norms of good documentation.”

In the context of Galison’s book, what was I doing wrong?

1. First, I was using the rhetoric of competition, popularity, and success. Those are values that, I think, come from outside the PureScript tradition. In that tradition lies the Haskell slogan quote “avoid success at all costs”. That has a double meaning. The less obvious – but I believe primary – meaning is “be very careful about paying too high a cost, in terms of violating our tradition’s design values, just for popularity”. The secondary meaning is “avoid popularity, period”. That’s perhaps mostly self-deprecating, but the saying wouldn’t be clever if it didn’t gesture at a real aesthetic rejection, a suspicion that there *is* no route to mass popularity that isn’t “selling out” the tradition. I think that’s entirely valid. I listen all the time to jazz musicians who have made similar choices in their careers. The mistake was *mine*, for taking so long to understand.

2. Second, I unintentionally communicated, “you in the PureScript tradition should adopt the values of *another tradition* because they’re *better* than your own.” Strangely, that message is never popular.

Those things would have doomed my project and my book, I think. But confession time:

As I realized that my bet on PureScript was not likely to pay off, I did – self-indulgently – let my frustration show. Frankly, I became a jerk on Slack and Twitter. I do regret that, and I apologize for it. But I think I’d have failed even if I were way more persuasive and diplomatic than I am. Structural factors – factors Galison lays out – were against me all along.

Anyway, I made /An Outsider’s Guide to Statically Typed Functional Programming/ free when I gave up. The PDF is available through Leanpub, and I think the 400 pages I finished actually give pretty good coverage of Elm and some of the lore around it. There’s a link in the show notes.

In the midst of my “let’s all document!” push, I also started a book called /Lenses for the Mere Mortal/. Lenses are a neat concept and library ruined by mind-bendingly *atrocious* documentation, in both the PureScript and Haskell versions. At least that was true in 2018. So I wrote the book to help me understand the software. It’s not as good as the other one, and is incomplete in some important ways, but it does also have the virtue of being free.

I hope this navel-gazing analysis of one of my failures is not *just* cringe. I hope it also gives you some ideas of how Galison’s descriptions of intellectual traditions might be applied to your own projects.

Next time: “the trading zone”. And, as always, thank you for listening.