E32: Foucault, /Discipline and Punish/, part 3: expertise, panopticism, and the Big Visible Chart

Welcome to Oddly Influenced, a podcast about how people have applied ideas from *outside* software *to* software. Episode 32: Foucault, /Discipline and Punish/, part 3: expertise, panopticism, and the big visible chart.

This final episode on Foucault’s /Discipline and Punish/ (supplemented with Prado’s /Starting with Foucault/ and a few other sources) is about one group of mind-molding techniques associated with the idea of expertise and another that Foucault calls “panopticism”.

Foucault was on one side of the “science wars” of the 1990s, which made the sniping back and forth between advocates of dynamic and static typing seem a calm and disinterested search for truth. There were, roughly, two sides: the “social constructivists” and the “realists”. The social constructivist side of the argument can come in two forms, which I’ll call the “can’t we all get along” form and the “edgelord” form. In this episode, I’ll explain the “can’t we all get along” variant of Foucault. If you’re interested in the edgelord version, together with some commentary that I haven’t seen elsewhere, drop me a line at marick@exampler.com or via Mastodon. The show notes have links for how to contact me. If you want a balanced discussion of the issues at play in the science wars, written by someone with way more credibility than I have, I recommend Ian Hacking’s /The Social Construction of What?/.

≤ music ≥

In this period around 1800, armies and factories were wildly successful in large part because they had well-developed opinions on how to drill people, what microtasks to drill them in, and what successful task performance looked like. That is, they had experts in bodily movement.

Prisons needed their own experts, but they had a harder problem. You can tell how well a soldier marches by watching him do it. The same is true of a student practicing penmanship or a factory worker sharpening the tips of pins. But you can’t directly observe what prisons were trying to create: industriousness and what Foucault calls “docile bodies”. That is, you can observe whether the prisoner behaves in an industrious or docile manner *within the highly constrained environment of the prison*. But it’s your job as, say, prison warden to know whether that’s just “going along to get along” or whether it signals a true, fundamental change in the prisoner’s character, one that will last after release. A lieutenant doesn’t care whether soldiers are marching *sincerely* or *skeptically*, just that a column of troops pivots flawlessly when commanded to do so. But skeptical prisoners are going to go right back to crime when they get out.

Worse, unlike factories and the army, prison wardens didn’t get to pick their trainees; trainees picked themselves, by doing a crime and getting caught. Moreover, wardens couldn’t expel someone who wasn’t measuring up. Just the opposite in fact: some sentences were indefinite, so wardens had to keep a prisoner until they *did* measure up.

So: a lot of work to do. Expertise about the mind of the prisoner and how to influence it had to be developed, more or less from scratch.

One thing experts do is standardize. Sometimes that’s conceptually straightforward. For example, during the period Foucault was studying, France got fed up with everyplace having widely varying definitions of measures like “pint”, so experts were enlisted to come up with the standard liter, meter, gram, and so on. Other kinds of expertise are more subtle. Dairy cattle are sometimes judged by whether they have “good dairy character”. The Mississippi State University Extension defines that as having, “a great deal of angularity and openness, freedom from coarseness, […] cleanness throughout, a long, lean neck, sharp withers with no evidence of fat”, and so on. Notice how many of the components are subjective, which makes obtaining expertise harder, usually requiring the kind of apprenticeship described in episodes 20 and 21. Because of that, my wife, who has treated 5000 or more dairy cattle in her career, has only a lay understanding of dairy character. She hasn’t needed more.

Similarly, Foucault claims that, prior to the 1700s, there was no need for any kind of precise idea of a “normal person”. Since prison experts had the job of creating better people, they created the reference standard normal person. For the first time, experts were able to describe and rank people according to their degree of normality, much like a cattle judge can rate a cow as a 73 on the Purebred Dairy Cattle Association Score Card. This was a new way to be a human being in society.

Experts also invented the paper trail that we now just assume follows each person throughout their life:

“For a long time ordinary individuality – the everyday individuality of everybody – remained below the threshold of description. To be looked at, observed, described in detail, followed from day to day by an uninterrupted writing was a privilege. The chronicle of a man […] formed part of the rituals of his power. The disciplinary methods reversed this relation, lowered the threshold of describable individuality and made of this description a means of control.”

As my high school guidance counselor warned me at least once, “That could go on your permanent record.” Prior to around 1800, exercising power over someone’s future actions by what you write on a piece of archived paper just wasn’t a *thing.*

In classic expert style, these new experts on the mind of the prisoner both lumped and split. They lumped people into conceptual categories that hadn’t existed before. The new category of “habitual offender” or “delinquent” contained people who had to be treated differently than “first offenders”. And the experts split by making distinctions that hadn’t been made before, and theorized what such newly-studied distinctions in behavior meant about the mind. They also split expertise in the way that always happens, where different kinds of experts attend to similar data to make different interpretations for different purposes. Some things are studied by both chemistry and physics, but the two study them in different ways. Similarly, we advanced from having the single generic expert on the understanding and molding of prisoners to having different expertises for – quoting Prado – “judges, lawyers, coroners, police, parole boards, prison guards, forensic psychologists, counselors, reformers, and support groups.”

Foucault claims that, prior to around 1750, people didn’t generally think of the mind as having any interesting interior structure. This example is mine, not his, but consider the emotions. Since forever in the western world, the theory was that emotions were caused by the four bodily liquids: blood, phlegm, yellow bile, and black bile. It’s phlegm that causes you to be calm or phlegmatic, it’s yellow bile that causes you to be angry or choleric. The word “sanguine” comes from the Latin word for blood because the blood is the cause of positive emotions. What we consider part of the mind, emotions, people once knew was caused *in* the mind by something from its *outside*.

People hadn’t cared about the interior structure of the mind because they hadn’t needed to. Now the prison experts did, so the mind became an object of study, with the practical application being to make prisoners become more disciplined, industrious, and docile. And those practical uses provided data that was used to create more knowledge, with further practical applications in what Foucault describes as a positive feedback loop between power and knowledge.

And knowledge of the mind was applied outside of prisons, to hospitals, factories, insane asylums, and so on. What Foucault calls disciplinary methods spread throughout society – for example, the ritualized examination as a way of indirectly deducing skill grew widespread, to the point where, today, zillions of tech companies make programmers interviewing for jobs do silly things like writing an algorithm for reversing a linked list on the whiteboard. We do that not because it works at knowing how good a programmer someone is – everyone knows it’s iffy for that – but because the ritual of examination is so embedded in society. Examinations are *what we do*, and the need to have *some* examination is stronger than whether a particular examination serves a purpose.

For Foucault, all these methods created the disciplines of psychology and sociology, and those changed people’s whole way of talking and thinking about themselves and their place in the world. The experts told our ancestors about the mind, and our ancestors *believed them*. Foucault calls that “the creation of the subject”, which is another play on words. “Subject” here means, first, a person subjected to the discipline imposed by power, and – second – that the working of that discipline created a new kind of subjective experience.

That seems plausible to me. Consciousness is the mind thinking about itself. Just like, when you have a baby, suddenly you see babies everywhere, when your society tells your mind it has certain properties, it’s going to see them in itself. As Michael Feathers once quipped, “once I learned about availability bias, I started seeing it everywhere”.

I find Foucault’s progression somewhat unclear, but I think he’s saying something like this as the big picture: the attempt to produce industrious and docile people was primarily inspired by the factory, was applied to other situations (like the schoolroom and especially the prison), and perhaps reached its peak in the management of prisons. It didn’t work for creating industriousness, but the various disciplinary methods did produce docile bodies. Reforming the mind sort of dropped off the agenda in favor of just controlling the body. Remember how I said that the warden had to worry whether the results of prison discipline would “stick” when the prisoner was released? Well, that worry goes away when the disciplinary methods are *everywhere*, if there’s never any escape from them. As early as 1772, Guibert wrote, “Discipline must be made national. The state that I depict will have a simple, reliable, easily controlled administration. It will resemble those huge machines, which by quite uncomplicated means produce great effects; the strength of this state will spring from its own strength, its prosperity from its own prosperity. Time, which destroys all, will increase its power.”

The irony is that discipline, as its focus shifted ever more to just control of the body, in fact *did* change the mind. Was the change large enough to be a new *kind* of subjectivity, as Foucault claims? I dunno. How many grains of sand do you have to drop, one by one, onto a spot before you can legitimately call the result a *pile* of sand? At what point does a list of new kinds of subjective experience turn into a new *kind* of subjectivity? Who cares?

≤ music ≥

Now we come to panopticism, a term derived from a pamphlet written by the philosopher Jeremy Bentham. Its title was: “Panopticon; Or, The Inspection House: Containing the Idea of a New Principle of Construction Applicable to Any Sort of Establishment, in Which Persons of Any Description Are to Be Kept Under Inspection; And In Particular to Penitentiary Houses…” followed by 44 more words that I’ll spare you.

Despite being “applicable to any sort of establishment”, the Panopticon came to be mostly associated with prisons, because Bentham seized on a decision by the British government to use Irish prisoners as guinea pigs for a penitentiary system (that is, a system of solitary confinement modeled after monasteries). He thought he had a general-purpose idea, but prisons were where the funding was.

The idea of the Panopticon was originally developed by Jeremy’s brother Samuel for his (Samuel’s) manufactory in Russia. He had inspectors who would keep constant watch over the workers so that they wouldn’t slack off or otherwise misbehave. But he was forced to have more inspectors than he wanted. (Side note: The employers and governments of this age were the most *astounding* cheapskates when it came to paying for labor. It was as if cutting payroll were a religious calling. Kind of like it is for tech company shareholders here in 2023.) Anyway, Samuel’s idea was to station an inspector in such a way that that inspector could see all the workers but they couldn’t see him. Samuel reasoned that, since workers couldn’t tell if the inspector was looking at them, they’d have to behave as if he very probably was. The power of mass observation, at greatly reduced cost.

It’s probably worth noting that Samuel’s idea was never put into practice. Jeremy later wrote: “the sudden breaking out of the war between the Turks and the Russians […] concurred with some other incidents in putting a stop to the design.” And Jeremy’s elaboration of the idea – the one for the prison – never got built either. (He didn’t end up getting the funding.) Panopticon variants were built, though. There’s a neat one in the Netherlands that’s currently being used to house Ukrainian refugees. There’s a link to a podcast about it in the show notes.

So, what was the “simple idea in Architecture” that Bentham thought would produce “Morals reformed – health preserved – industry invigorated [etc. etc.]”? Because I love the writing style of this period, I’ll inflict on you just a bit of Bentham’s specification for the Panopticon:

“The building is circular.

“The apartments of the prisoners occupy the circumference. You may call them, if you please, the *cells*.

“These *cells* are divided from one another, and the prisoners by that means secluded from all communications with each other, by *partitions* in the form of *radii* issuing from the circumference toward the center, and extending as many feet as shall be thought necessary to form the largest dimension of the cell.

“The apartment of the inspector occupies the centre; you may call it if you please, the *inspector’s lodge*.

“It will be convenient in most, if not all cases, to have a vacant space or *area* all round, between such centre and such circumference. You may call it if you please the *intermediate* or *annular* area.”

Basically, it’s a multi-story donut-shaped building, with a tower standing in the center of the donut. Each cell has a slice of the donut. The outer and inner walls are mostly window, so that the interior of the cell is brightly lit and clearly visible from the central tower. The side walls of the cells isolate the prisoners, one from the other.

The tower (if you please, the inspector’s lodge) is also mostly window, but it’s dark inside so the prisoners can’t tell if the inspector is watching them. They’ll have to assume he is. That gives the inspector the *power* of continuous observation without the reality. (Bentham goes into a lot of detail about how to trick prisoners into thinking they’re being watched more than they are, but I admit: I probably wouldn’t have funded this either.)

Because Bentham’s Panopticon was never built, you can’t really say it ever did anything, but Foucault found it a dandy metaphor. His reasoning goes like this:

Parents and factory owners alike know that watching children or workers dissuades them from breaking the rules (or, more fancily put, from violating norms).

The Panopticon is premised on the idea that inducing someone to *believe* they’re being watched is just as good as watching them. You don’t actually have to watch them all the time.

In fact, you don’t have to watch them at all if you can induce them to *watch themselves*. The function of the permanent record, and examinations, and all the rest of the disciplinary methods is to teach them – us, really, all of us – what to watch *for*. These are the norms that – and this will sound weird – are driven by power. I used that phrase because Foucault doesn’t conceive of power as something that belongs to people – it’s more that people belong to power. Prado puts it this way: “[Power] is not anything anyone has or controls, and it serves no ends or goals.” So how then does power *drive* the internalization of norms? I think of it as something like the slime mold that generated the Tokyo subway map. The following is from National Geographic:

“A group of researchers […] placed [a slime mold] in a petri-dish scattered with oat flakes. The position of food scraps was deliberately placed to replicate the locations of some of the most visited sites in Tokyo. In the first few hours, the slime mold’s size grew exponentially, and it branched out through the entire edible map. Within a few days, the size of its branches started to shrink, and the slime mold established a complex branching network between the oats on the petri-dish. Despite growing and expanding without a central coordination system like the brain, the mold had re-created an interconnected network made of slimes that looks almost exactly like the efficient, well-designed Tokyo subway system.”

I recommend the time-lapse video.

So, the slime mold created a map, but that was not its *purpose*. Its purpose was to sustain itself by distributing nutrients throughout itself. It originally made something of a mess, then narrowed that mess down to a near-optimal structure. Similarly, power acts to sustain itself. By implanting norms in people and having them self-observe, power sustains itself in an efficient way without relying on anyone intending anything.

I could say more but that would get into edgelord territory. It’s enough to say that this self-observation for particular norms is part of what Foucault means by the “creation of the subject”.

≤ music ≥

Finally, we get to voluntary panopticism in Agile teams!

There used to be a tradition in Agile – I think it’s mostly gone away – called “Big Visible Charts” or “Information Radiators” (that last coined by Alistair Cockburn). In an article, Ron Jeffries summarizes them thusly: “Display important project information not in some formal way, not on the web, not in PowerPoint, but in charts on the wall that no one can miss.”

I have two examples. One is an actual chart, one is not.

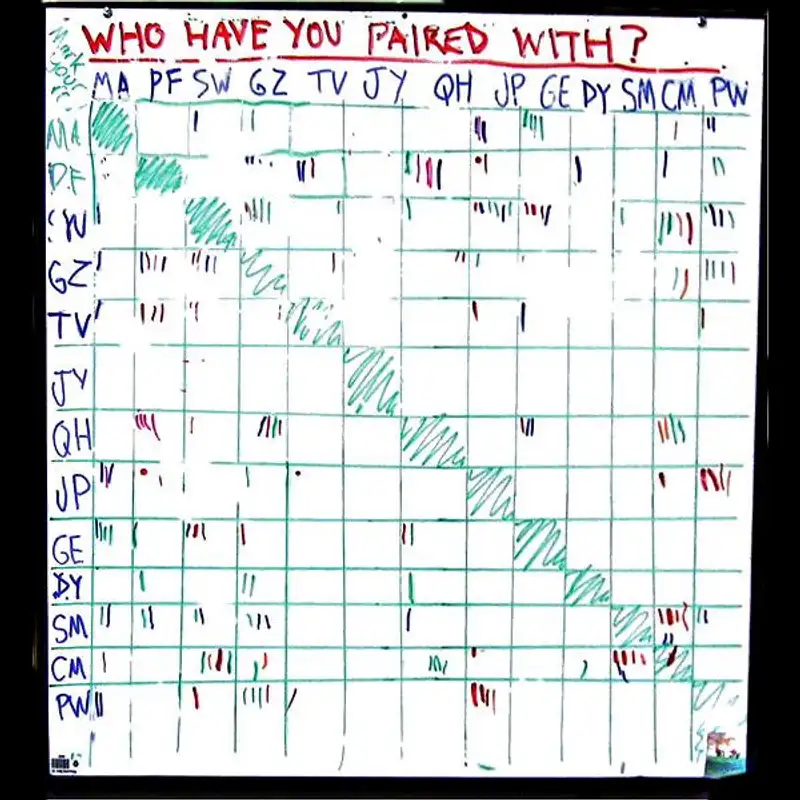

The first is the chart that’s the custom image for this episode. I can’t do better than quote the description from the now-defunct bigvisiblecharts.com:

“At one of our regular retrospectives a few iterations back, the team decided that we had a bad habit of not rotating our pairs enough. More specifically, we tended to pair with the same few people for all of our tasks.

“Perryn Fowler was charged with trying to rectify the situation, and he drew this chart up on a whiteboard shortly afterwards.”

The image is a 2D chart, a grid, titled “Who Have You Paired With?”. Both the X and Y axes are labeled with people’s initials.

Quoting again:

“The idea here is that you put a mark in the square that intersects your initials and your pair’s. We didn't enforce any rules other than that. Instead, we just let people come to the realization that they were filling in some squares a lot, and others not so much.”
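For a team without a shared whiteboard, the same grid is easy to approximate in software. What follows is a minimal sketch, not anything the team in the quote used (theirs was a whiteboard and a marker). The function names and the initials are made up for illustration, and the "emptiest squares" report is my addition; the original chart just let people notice for themselves.

```python
from collections import Counter
from itertools import combinations


def record_pairing(chart, a, b):
    """Put one mark in the square where a's and b's initials intersect."""
    if a == b:
        raise ValueError("a pair needs two different people")
    # A frozenset ignores order, so (AB, CD) and (CD, AB) share one square.
    chart[frozenset((a, b))] += 1


def neglected_pairs(chart, team):
    """Return the pairs with the fewest marks, i.e. the emptiest squares."""
    counts = {
        pair: chart[frozenset(pair)]
        for pair in combinations(sorted(team), 2)
    }
    fewest = min(counts.values())
    return [pair for pair, n in counts.items() if n == fewest]


def render(chart, team):
    """Draw the grid as text: one row and one column per set of initials."""
    names = sorted(team)
    lines = ["    " + " ".join(f"{n:>3}" for n in names)]
    for row in names:
        cells = [
            "  -" if row == col else f"{chart[frozenset((row, col))]:>3}"
            for col in names
        ]
        lines.append(f"{row:>3} " + " ".join(cells))
    return "\n".join(lines)


team = ["AB", "CD", "EF"]  # hypothetical initials
chart = Counter()
record_pairing(chart, "AB", "CD")
record_pairing(chart, "AB", "CD")
record_pairing(chart, "CD", "EF")

print(render(chart, team))
print("Emptiest squares:", neglected_pairs(chart, team))
```

As with the whiteboard, nothing here enforces a rule. The grid only makes the lopsidedness visible, and it only does that if it is displayed somewhere people can't miss it.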

I think you can describe that like this: the team as an entity knew they *should* have a habit (or norm), realized they didn’t, and made sure that they themselves (not a boss who tells them what to do) observe where they’re falling short. Nothing more was required to make the team adjust their behavior and – Foucault might say – make them into more of the sort of “subjects” who naturally swap pairs more often.

That fits with Foucault’s description of panopticism (if you allow me to think of the team as an entity somewhat distinct from its individual members – which I think is justified for any team worth the name and something we don’t balk at doing for, say, high-performing sports teams). And, since it’s not imposed by an outside force, it’s: voluntary panopticism. (An edgelord Foucault might say it was *actually* imposed by the impersonal operation of power, but… meh. Too abstract for me.)

≤ short music ≥

The second example is the brief fad to hook up lava lamps to build servers. This was before the existence of the compute-cloud. Teams typically had a dedicated machine – the build server – that idled away until someone committed a code change to the version control system. The build machine would then wake up, build the new system, and run all the tests against it. You didn’t want any tests to fail because that meant you’d introduced a bug. Because bugs are bad, fixing the problem was supposed to be the highest priority whenever the build failed any tests.

Alberto Savoia was the founder of a small software company. He noticed that fixing a broken build *wasn’t* the highest priority. That meant that other programmers might build upon the broken code, which would cause all kinds of confusion since there was no stable baseline.

So Alberto hooked up the build server to two lava lamps, an intermittently popular technology that has globs of colored wax in a roughly cylindrical, transparent container that’s filled with some sort of colored liquid. There’s a heat source in the base. Heated wax is more buoyant, so globs of wax would float up to the top of the lamp, where they’d cool down and then descend.

Drugs make it more interesting.

In Alberto’s setup, passing tests (the normal state) had the green lava lamp turned on. When a test failed, the green lamp was turned off and the red one was turned on. Since the lava lamps were in the middle of a large U-shaped table configuration, all the programmers could see them. The lamps *literally* radiated information.

It turns out that lava lamps take about 15-20 minutes to get warm enough for the wax bubbles to start floating upward. It became a competition among programmers: as soon as the red lava lamp turned on, the person who'd broken the build really really wanted to fix it before the bubbles started rising.
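The lamp logic is simple enough to sketch. This is not Alberto's actual setup, which I haven't seen; it's a minimal model under stated assumptions: the class and function names are hypothetical, time is passed in as plain seconds, and the warm-up constant uses the low end of the "about 15-20 minutes" figure. Actually switching a power outlet is left out entirely.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: the low end of the "about 15-20 minutes" warm-up time.
WARM_UP_SECONDS = 15 * 60


@dataclass
class Lamps:
    green_on: bool = True   # passing tests are the normal state
    red_on: bool = False
    red_since: Optional[float] = None  # when the red lamp was switched on


def update(lamps, tests_passed, now):
    """Switch the lamps according to the latest build result."""
    if tests_passed:
        lamps.green_on, lamps.red_on, lamps.red_since = True, False, None
    elif not lamps.red_on:
        # Only the first failure starts the clock; repeated failures
        # leave the red lamp warming from its original switch-on time.
        lamps.green_on, lamps.red_on, lamps.red_since = False, True, now
    return lamps


def bubbles_rising(lamps, now):
    """True once the red lamp has been on long enough for wax to float."""
    return lamps.red_on and now - lamps.red_since >= WARM_UP_SECONDS


lamps = Lamps()
update(lamps, tests_passed=False, now=0)      # someone breaks the build
print(bubbles_rising(lamps, now=10 * 60))     # ten minutes in: still time
update(lamps, tests_passed=True, now=12 * 60) # fixed before the bubbles
print(lamps.green_on, lamps.red_on)
```

Note that the deadline lives entirely in `bubbles_rising`, which nothing in the build depends on. That mirrors the story: the lamp's warm-up time is irrelevant to the software and matters only because everyone can see it.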

Another example of the power of visibility. Everyone knew broken builds should be fixed quickly. People rarely did it before the introduction of a widely-observable signal that a completely irrelevant deadline hadn’t been met. Looking proficient to our peers is such a powerful driver.

In remote work, you can’t have a physical chart visible to everyone, though I suppose you *could* install a lava lamp in everyone’s home office. But I think it’s worthwhile for both on-site and remote workplaces to think about how they can make information be *seen* not just be *available*.

That is, information radiators are *not* project dashboards on a webpage that you have to specifically intend to pull up. I can’t put it better than Marc Kalmes did in response to me on Mastodon:

“At a previous company, we used stickies for our product- and sprint-backlog. We had writable walls and used them for burn down chart and velocity over the last n sprints. Today, all I see is the burn-down chart in Jira. If I had to guess, we look at the chart in Jira once or twice during the sprint (fourteen day cycle). At the previous company: Every finished story was tracked at the burn-down. We looked multiple times per day at that chart.”

The point is not that information is *available*. It’s that it *radiates*. A good part of what I take to be the Agile ethic is not to have such a high opinion of ourselves. We can’t rely on ourselves to consistently make good decisions, so – in our better moments – we create work structures that will *push us around* in our weak moments. That’s one of the reasons Extreme Programming is better than Scrum (except for the “getting widely adopted” part of “being better”): XP’s specific techniques were put together with human frailty in mind. Part of the point of pair programming was that it’s harder for two people to agree to skip a test or a refactoring than it is for one person to. The old practice of code ownership was discarded in favor of anyone being able to look at – and change – my code at any time. Just as with the Panopticon and parenting, the idea that *someone is watching* reduces bad behavior and leads to the unconscious and inevitable adoption of norms.

≤ short music ≥

Well. I could have probably done an episode on Big Visible Charts and the virtues of XP without all the foofahrah about Foucault. But *something* about those two things made them less “sticky” than lesser approaches. So maybe ladling a huge helping of Foucault over them will help them regain a little lost traction.

In general, I find Foucault an interesting case. He argues against so-called “whiggish history” which is the tendency to write history as an inevitable progression toward a given end, the way things are today. In its original form, it meant a history of England that showed a set of forces pushing consistently toward parliamentary sovereignty, personal liberties, and progress in general. Foucault’s project was to show that what pushes history around are forces that change over time, often seem fairly trivial, and combine in unexpected ways. He’s echoing the old saying, “God is in the details”, meaning that details *matter*. That’s why his books, which are actually philosophy, read like history: full of details and specific quotations.

But then he ruins it all by drawing radically simple conclusions with pleasing boundaries. Power *is* a graph with only one kind of arrow that points to other arrows. Power is *the* driver of knowledge, and knowledge is *for* increasing power. Epistemes or worldviews are wholesale: they aren’t modified, they’re *replaced*. So is our internal subjectivity: replaced.

Okay… But what happened to the importance of details?

The problem is that this is all so abstract that you can’t *do* anything with it. Power is all about actions acting on other actions. Fine. Now what? It’s like programming on a bare Turing machine, or doing math using only Peano’s axioms, or doing biology using the Standard Model of particle physics. That’s not what people do. People write programs before they learn about Turing machines. One of the best classes I took as an undergraduate was one that started with Peano’s axioms (or some similar axiom system) and spent the entire semester building up abstract algebra one definition/theorem/proof at a time. It’s a suspiciously convenient memory, but I swear the professor – who’d been teaching this class *forever* – finished proving the Fundamental Theorem of the Calculus just moments before the bell rang to end the final class of the semester. All the way from nine axioms to *that*, step by step. It was *awesome*. But it’s also *not* the way math developed: people built the upper stories before they built the foundations. And the distance between biology and particle physics is so vast that I’m sure there’s not a single working biologist who needs to know anything about particle physics.

As far as I can tell – I could be wrong – no one is building up from Foucault to create usable systems of ideas, and no one is building down from usable ideas to show how they can be grounded in Foucault. I don’t have any ideas along those lines either. That leaves Foucault serving an essentially negative purpose: to remind us that our stories of how we got here – wherever “here” is – are oversimplified. As, I suppose, are our stories of why we *didn’t* get *there* – like why Extreme Programming and Big Visible Charts fizzled. But even a properly complex story of that wouldn’t, I think, rely on Foucault’s too-abstract abstractions.

So, it turns out these episodes haven’t been useful. That’s always a risk of my style, which is to come in with an idea or five, even a complete outline, then learn what I *really* think in the process of writing the script. My hope is that the sort of person who listens to this podcast still finds these episodes interesting or entertaining. In any case, thank you for listening.