E43: The offloaded brain, part 3: dynamical systems

Welcome to Oddly Influenced, a podcast for people who want to apply ideas from *outside* software *to* software. Episode 43: The offloaded brain, part 3: dynamical systems

I’m still working on an episode about applications of ecological and embodied cognition for conventional programs, what with their data structures and algorithms and input events. But I think it’d be wrong not to cover how some of what I’ve been calling EEs think behavior should be described: which is via “dynamical systems”.

I’m taking the examples in this episode from Anthony Chemero’s *Radical Embodied Cognitive Science*.

One warning: I can think of no way to apply these ideas from *outside* software *to* software, so consider this episode as mostly descriptions of neat experiments.

≤ music ≥

Here’s Chemero’s description of dynamical systems:

“A dynamical system is a set of quantitative variables changing continually, concurrently, and interdependently over time [in a way that] can be described as some set of equations. […] Cognitive scientists ought to try to […] model intelligent behavior using a particular sort of mathematics, most often sets of differential equations. Dynamical systems theory is especially appropriate for explaining cognition as interaction with the environment because single dynamical systems can have parameters [from] each side of the skin.”

The example everyone uses of a dynamical system is Watt’s centrifugal governor for steam engines, so I will too. A main use for early steam engines was pumping water out of coal mines. Power for that was delivered via a rotating flywheel. You wanted it to rotate at a steady rate, even if the workload or the temperature of the boiler changed. The speed could be controlled by a throttle. If you wanted, you could pay someone to open the throttle wider if the flywheel slowed down, or do the reverse if it sped up. But it turns out the owners of coal mines preferred not to.

Watt’s way of doing away with an operator was clever. He attached a vertical spindle to the flywheel. Attached to *it* were two arms with heavy balls on their ends. The arms were attached with hinges. If the spindle wasn’t turning, the arms would point straight down. But the faster the spindle spun, the higher the arms would rise – via centrifugal force – until they could be completely horizontal.

The arms are also attached to the throttle. If the spindle begins to spin more slowly, the dropping arms will open the throttle wider, and the reverse happens if the arms rise toward the horizontal. There’s a screw that controls the relationship between arm angle and throttle. You fiddle with that until the governor keeps the spindle (and thus the flywheel) spinning at a constant rate.

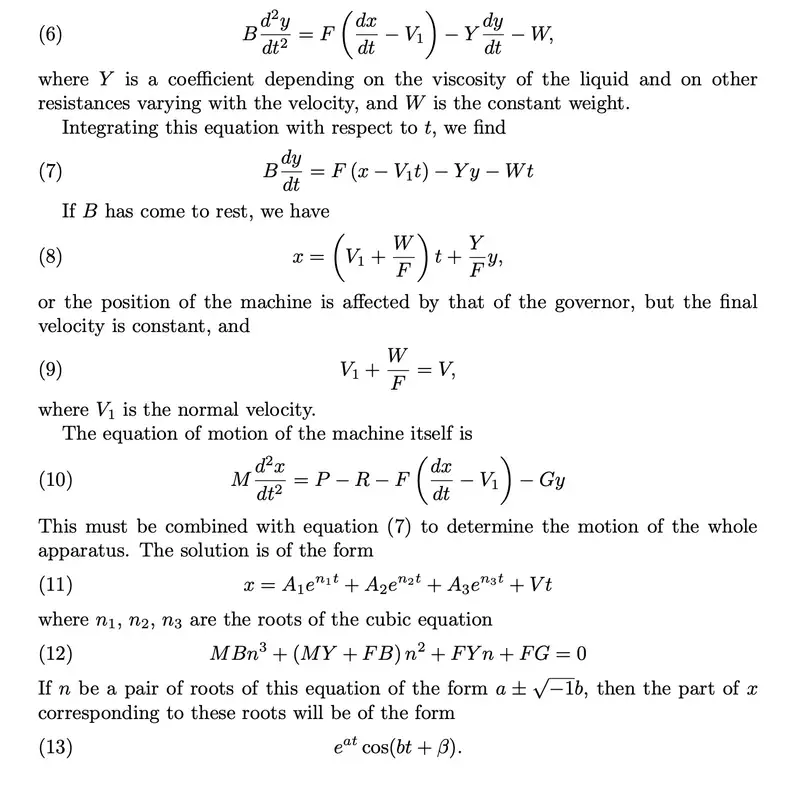

That’s where Watt stopped. But 80 years later, James Clerk Maxwell published a famous paper, “On Governors”, that describes the behavior of the Watt governor with two differential equations. The first describes the change of the arm angle (strictly, its acceleration), based on the current arm angle and engine speed. The second describes the change of the engine speed based on the current speed and the throttle setting, which, remember, depends on the arm angle.

These two equations are *coupled* because a term for engine speed and a term for arm angle appear in both. I don’t know if these equations have a closed-form solution or if you have to use approximations. In any case, you can use these equations to calculate how the system will evolve in response to perturbations of the engine speed, once you know the values of constants like friction and the relationship between arm angle and throttle setting.
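For anyone who wants to watch such a pair of coupled equations evolve, here is a minimal sketch that steps them forward numerically. The arm equation follows a commonly quoted textbook form for the governor. The engine equation, and every constant (`g_over_l`, `friction`, `gain`, `load`, `inertia`), is a made-up stand-in chosen only so the example settles down; none of it comes from Maxwell's paper or Chemero's book.

```python
import math

def simulate_governor(omega0, theta0, t_end=40.0, dt=0.001,
                      n=1.0, g_over_l=10.0, friction=2.0,
                      gain=2.0, load=1.0, inertia=1.0):
    """Step the two coupled equations forward with simple Euler integration.

    theta is the arm angle (0 = hanging straight down), omega the engine speed.
    All parameter values are illustrative assumptions.
    """
    theta, theta_dot, omega = theta0, 0.0, omega0
    for _ in range(round(t_end / dt)):
        # Arm acceleration depends on the arm angle AND the engine speed.
        theta_ddot = ((n * omega) ** 2 * math.cos(theta) * math.sin(theta)
                      - g_over_l * math.sin(theta)
                      - friction * theta_dot)
        # Engine acceleration depends on the throttle, which depends on the
        # arm angle (assumed: throttle opening proportional to cos(theta)).
        omega_dot = (gain * math.cos(theta) - load) / inertia
        theta += theta_dot * dt
        theta_dot += theta_ddot * dt
        omega += omega_dot * dt
    return theta, omega

# Steady state for the default constants: throttle balances load,
# and centrifugal effect balances gravity on the arms.
theta_eq = math.acos(1.0 / 2.0)                  # cos(theta) = load / gain
omega_eq = math.sqrt(10.0 / math.cos(theta_eq))  # omega^2 = g_over_l / cos(theta)

# Perturb the engine speed by 20% and let the governor pull it back.
theta_final, omega_final = simulate_governor(1.2 * omega_eq, theta_eq)
print(f"target speed {omega_eq:.3f}, speed after 40 s {omega_final:.3f}")
```

With too little friction the same equations oscillate with growing swings instead of settling, which is the kind of qualitative question the equations let you ask.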

≤ short music ≥

In dynamical systems theory, the emphasis is on long-term qualitative behavior. Wikipedia says quote “the focus is not on finding precise solutions to the equations defining the dynamical system (which is often hopeless [as in the case of chaotic systems]), but rather to answer questions like ‘Will the system settle down to a steady state in the long term, and if so, what are the possible steady states?’”

A common analogy for such steady states begins with a landscape containing hills and valleys. If the system is poised at the top of a hill, not too much of a perturbation is needed to send it rolling down into the nearby valley. But once in the valley, it will stay there rather than roll back up to the top of the hill.

If you consider the landscape to be a three-dimensional graph, there are two input variables – x and y, say. The height of the landscape at a given (x,y) point is a value that the system “wants” (in scare quotes) to minimize. For a body, that value is often “energy expended”. So a valley might represent the most efficient way to make some continuous movement.

Which brings us to your fingers. Assuming you’re not listening to this episode while driving, hold your elbows at your side, with arms horizontal, hands open, thumbs on top, and your index fingers pointing forward. Now wag the two index fingers back and forth, horizontally, at the same speed.

I’ll pause to let you do that.

≤ pause ≥

Unless you’re well-trained in finger-wagging, you almost certainly adopted one of two patterns. Either the fingers will be in phase, going back and forth together like most windshield wipers do, or they will be entirely out of phase, both simultaneously moving toward the midline, getting the closest to the belly button at the same moment, sweeping outward together, reaching their farthest extent at the same time, then back again together.

You can model fingers as quote “nonlinearly coupled oscillators”. (I’m guessing people decided they’re coupled because, well, look at them: they naturally act in synchrony.) It takes energy to maintain wagging, so that gives us something to minimize.

The equation of motion for wagging fingers has a single variable, phi, which is the relative phase of the two fingers. The equation is -A cosine ɸ - B cosine 2ɸ. I do not know how they came up with that.

If you graph that equation, you get a curve with two minima. One is when the fingers are in phase, windshield-wiper style. A shallower one is at the entirely out-of-phase wagging. That describes the observed behavior: if you temporarily achieve any other relative phase, it will quickly slide into one of the two minima.
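If you'd rather check the two minima than take them on faith, here is a small sketch that samples the expression around the full circle of relative phases and reports where the dips are. It reads the expression as the height of the landscape, and the values `a=1.0` and `b=1.0` are arbitrary assumptions for illustration.

```python
import math

def hkb_potential(phi, a=1.0, b=1.0):
    """Height of the landscape at relative phase phi (radians)."""
    return -a * math.cos(phi) - b * math.cos(2 * phi)

def local_minima(a, b, samples=360):
    """Return the relative phases (in degrees) that sit lower than both neighbors.

    The phase is circular, so the first and last samples are neighbors.
    """
    values = [hkb_potential(2 * math.pi * i / samples, a, b)
              for i in range(samples)]
    minima = []
    for i in range(samples):
        left = values[i - 1]                 # index -1 wraps around
        right = values[(i + 1) % samples]
        if values[i] < left and values[i] < right:
            minima.append(i * 360 // samples)
    return minima

print(local_minima(1.0, 1.0))
print(hkb_potential(0.0), hkb_potential(math.pi))
```

Zero degrees is the windshield-wiper pattern and 180 degrees is the fully out-of-phase one; comparing the two printed heights shows which valley is deeper.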

That in itself is not wildly interesting. There was some observed behavior. Some people came up with an equation that generates that behavior. Okay, but that only gets interesting if the equation can be used to predict something new and surprising. (See episode 17 on Imre Lakatos’s “methodology of scientific research programmes.”)

So what our researchers did was take the derivative of phi with respect to time. The new equation predicts that, if you’re a non-windshield-wiper wagger, it will become harder to maintain that as you speed up the wagging. Eventually, you’ll slip into windshield-wiper mode.

Moreover, “as the rate approaches [the] critical value, attempts to maintain out-of-phase performance [should] result in erratic fluctuations of relative phase”. Your finger movements get messy as you approach the transition.

This is indeed what happens.
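The slip into windshield-wiper mode can be sketched in a few lines. This assumes the rate of change of phi is the downhill slope of the earlier expression, that is, -A sin ɸ - 2B sin 2ɸ, and it assumes (as the model is usually presented) that speeding up corresponds to B shrinking relative to A. The specific numbers are invented, and the sketch has no noise term, so it shows the switch but not the messy fluctuations before it.

```python
import math

def settle(a, b, phi0, t_end=100.0, dt=0.01):
    """Follow the relative phase downhill from phi0 using Euler steps."""
    phi = phi0
    for _ in range(round(t_end / dt)):
        phi += (-a * math.sin(phi) - 2 * b * math.sin(2 * phi)) * dt
    return phi

# Start just shy of fully out of phase (180 degrees).
start = math.pi - 0.1

slow = settle(a=1.0, b=1.0, phi0=start)   # stand-in for slow wagging
fast = settle(a=1.0, b=0.2, phi0=start)   # stand-in for fast wagging

print(f"slow wagging ends at {math.degrees(slow):.1f} degrees")
print(f"fast wagging ends at {math.degrees(fast):.1f} degrees")
```

In this form the out-of-phase valley disappears once B drops below a quarter of A, so anything started near 180 degrees has nowhere to rest except the in-phase pattern.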

This equation glories in the name of the “Haken-Kelso-Bunz model”. Like any scientific model, it’s subject to the “what have you done for me lately” test. What *else* can it predict?

What about leg swinging? Does that work the same way? You won’t be surprised to find that it does. More interesting is *two* people, each swinging their right leg. There is the same qualitative behavior: the people are in-phase or out-of-phase, and out-of-phase transitions to in-phase as the swinging gets faster.

The model can also be extended to better capture finger-wagging. It turns out that right-handed people are better at wagging their right index finger than their left. You can add a term to the basic equation – for the record, C sinɸ - 2D sin 2ɸ – which better matches the data for experiments.

It gets weirder. The previous examples don’t seem to have much, if anything, to do with *thinking*. But what about this?

Imagine being given a sheet of paper showing a series of interlocked gears, say four of them, connected in series. Experimental subjects are told that the first gear is turning clockwise and asked which direction the final gear is turning. Both adults and preschoolers usually start by using their finger to go around the first gear clockwise, the second counterclockwise, the third clockwise, and the fourth counterclockwise. And so counterclockwise is the answer. After some number of trials of different numbers of gears, people catch on to just counting the gears, since odd-numbered gears always rotate in the same direction as the starting gear.
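The two strategies are easy to put side by side in code. This is only an illustration of the puzzle, with function names made up for the purpose; it says nothing about what happens in anyone's head.

```python
def flip(direction):
    """Meshed gears turn in opposite directions."""
    return "counterclockwise" if direction == "clockwise" else "clockwise"

def final_direction_by_tracing(num_gears, first="clockwise"):
    """The finger strategy: walk along the chain, flipping at each mesh."""
    direction = first
    for _ in range(num_gears - 1):
        direction = flip(direction)
    return direction

def final_direction_by_counting(num_gears, first="clockwise"):
    """The trick: odd-numbered gears match the first gear, even ones oppose it."""
    return first if num_gears % 2 == 1 else flip(first)

print(final_direction_by_tracing(4))   # counterclockwise
print(final_direction_by_counting(4))  # counterclockwise
```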

(I’ll note that, annoyingly, when I told Dawn about this, she immediately jumped right to the trick, kind of ruining the big reveal.)

There’s nothing weird here. People generalized from a few examples. Big deal.

What’s interesting is that “this ‘a-ha’ moment is preceded by a spike in system entropy. That is, the finger movements exhibit critical fluctuations, indicating that the extended system solving the problem is a nonlinearly coupled dynamical system undergoing a phase transition.”

That is, realizing the trick is somehow something like switching from out-of-phase to in-phase finger wagging. Which *is* weird: what links finger wagging to solving a reasoning problem? Hold that thought.

≤ short music ≥

I’ll give one final example, involving a different equation.

Some other scientists – call them the Speechifiers – modeled a speech-recognition task with the equation k*x - 1/2 x^2 + 1/4 x^4. Yet another set of scientists – call them the Stickifiers – wanted to investigate this question:

Suppose I show you a stick and ask you if you could reach out with it and touch some object. It’s no fair to actually try it with the stick; you have to imagine.

And now suppose you are writing a computer program to solve this problem, one that might be running in your brain. It’s easy enough. There is a data structure representing your arm. Based on long experience, you know how long that arm is, and you can look it up in a field in the Arm data structure. Your eyes show your brain a stick, and the brain can estimate its length. Answering “can you touch it?” means adding those two lengths and comparing the sum to the estimated distance to the object, also calculated from data your eye delivers.
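Spelled out, that straightforward program might look something like the sketch below. The `Arm` class, the `can_touch` function, and the centimeter figures are all hypothetical, invented just to make the description concrete.

```python
from dataclasses import dataclass

@dataclass
class Arm:
    # Known from long experience, so it is simply stored.
    length_cm: float

def can_touch(arm, estimated_stick_cm, estimated_distance_cm):
    """Add the two lengths and compare the sum to the distance.

    Both estimates come from vision, so in this model they are the
    only place an error can creep in.
    """
    reach_cm = arm.length_cm + estimated_stick_cm
    return reach_cm >= estimated_distance_cm

my_arm = Arm(length_cm=70.0)
print(can_touch(my_arm, estimated_stick_cm=40.0, estimated_distance_cm=100.0))
print(can_touch(my_arm, estimated_stick_cm=20.0, estimated_distance_cm=100.0))
```

Notice what is absent: nothing in `can_touch` remembers earlier sticks or earlier answers, so the same inputs always give the same output.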

According to that model, experimental subjects might get it wrong, but that would be solely due to mistakes in estimating lengths from visual perception. But *is* that the only source of error?

For some reason, the Stickifiers decided to apply the Speechifiers’ equation to the stick-poking problem. I *think*, but Chemero doesn’t say, that they were using the Speechifiers’ equation to model sources of error.

Importantly, in the k*x - etc. etc. Speechifier equation, the Stickifiers decided that `k` wouldn’t be a constant but rather a function of history: the history of sticks presented and the answers given for those sticks.
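One way to get a feel for why `k` matters: treat the expression as a landscape over x and count its valleys for different values of `k`. The sketch below does only that. The sample values of `k` and the scanning range are arbitrary assumptions, and it makes no claim about how the Stickifiers actually computed `k` from the history of sticks and answers.

```python
def potential(x, k):
    """The Speechifier expression: k*x - 1/2 x^2 + 1/4 x^4."""
    return k * x - 0.5 * x ** 2 + 0.25 * x ** 4

def count_wells(k, lo=-2.0, hi=2.0, samples=4001):
    """Count the grid points that sit lower than both of their neighbors."""
    step = (hi - lo) / (samples - 1)
    values = [potential(lo + i * step, k) for i in range(samples)]
    return sum(
        1
        for i in range(1, samples - 1)
        if values[i] < values[i - 1] and values[i] < values[i + 1]
    )

for k in (-0.5, 0.0, 0.2, 0.5):
    print(f"k = {k:+.1f}: {count_wells(k)} valley(s)")
```

For `k` near zero there are two valleys, which you could loosely read as two possible answers; push `k` far enough in either direction and one valley vanishes. So a `k` that drifts with history can change which answers are even available.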

They made four predictions about different sources of error, and the experimental data turned out to match those predictions. I’ll only cover two related ones.

Consider being asked the question multiple times. Each time, you’re given a stick and asked the “can you touch it?” question. Then the stick is taken away and the trial is repeated with a new stick. All the sticks differ only in their length.

I’m going to say the lengths are 1, 2, 3, 4, etc. I don’t know the actual lengths, but stick 4 is longer than stick 3 and so on. In this experiment, the stick lengths increased and then decreased, like: 1, 2, 3, 4, 5, and then 4, 3, 2, 1.

In one set of trials, the increasing sequence went from 1 to 9. Let’s suppose 1, 2, 3, and 4 get a “no” answer, and stick 5 gets the first “yes”. Now suppose sticks of length 6, 7, 8, and 9 are presented. You’d predict that those would also get a “yes”, since they’re longer than 5. And they do, all the way up to the last stick on the upward slope, 9. Now what about the 8, 7, 6, etc. on the downward slope? Since stick 5 was the first “yes” on the way up, it should be the last “yes” on the way down.

However, people will tend to give the first “no” too early. That is, they might start answering “no” with stick 7 rather than stick 4, even though 7 was plenty long enough the first time – and then do the same for 6, 5, 4, and all the way down to 1. The experimenters had predicted that, based on the equation that involved history. Specifically, they predicted the longer the run of “yes”es, the more likely the subject would “jump the gun” and answer “no” too soon on the way back down.

But what about a *short* run of “yes” answers? The researchers predicted that, instead of saying “yes” too little, subjects would (on average) say “yes” too much. Even after they were presented with sticks they’d said were too short on the way up, they’d say they were long enough on the way down, sort of the opposite of the earlier case.

This is weird.

≤ music ≥

What can we *do* with this information?

Chemero says (in not quite these words) that: if you were writing a computer program to solve these problems, you’d do it in some straightforward way. Your code wouldn’t produce “critical fluctuations at boundary conditions”. And you wouldn’t write code that, given a simple perception+addition problem, dragged in the history of past judgments, past sticks.

What, in our understanding of natural selection, would cause evolution to create a brain that goes to a whole lot of extra computational effort to act weirdly and inefficiently?

Therefore, Chemero says, we should abandon the notion that the brain is anything like a computer. It’s something very different.

Not everyone, you’ll be surprised to hear, agrees. As far as I can tell, there are three main objections:

1. These equations are descriptions, not explanations. *Why* is rhythmic motion described by `A cosine etc. etc.`? What we want is something more like the direct causal link between the arm angle and engine speed that you get with the Watt centrifugal governor. Put differently, how does knowing that equation help us build robots?

2. We know that evolution is somewhat slapdash. The reasoning brain could be a computer built – somewhat shakily – on earlier adaptations. Those foundations might show through in fluctuations before a phase shift, say. But that doesn’t mean the fluctuations are *essential* – they’re just the equivalent of friction that can be avoided by using better materials: silicon. We don’t have to give up on the idea of the brain-as-computer.

By analogy, a computer chip doesn’t *really* deal with 1s and 0s but with voltage levels that range continuously between zero and 3.3 volts (or whatever the maximum is for that chip). It probably even overshoots 3.3 a bit on the way up: a fluctuation. But we arrange things such that we don’t have to think about the fundamentally analog nature of our digital devices. We treat them as if they were purely digital. And so let’s do the same with the brain.

And, what I think is most important, though least explicit:

3. We *want* the brain to be a computer. Why? My theory starts with the fact that, well, we *die*. That sucks. Especially since it’s often messy, undignified, painful, and strips us of reason and language, two things that we cherish as setting us apart from animals.

Actually, it’s not just death: so much of living is messy, undignified, and painful. And because we humans love, love, *love* good/bad dichotomies, we have to have *something* that contrasts with the unpleasant parts of life, that offers a hope of some kind of release or reprieve.

A nice modern example of this need is longtermist philosopher Nick Bostrom’s “Letter from Utopia”, imagined to be written by a distant descendant of ours to us of today. The either/or, good/bad contrast is explicit. Here’s the good future:

“My mind is wide and deep. I have read all your libraries, in the blink of an eye. […] What I feel is as far beyond feelings as what I think is beyond thoughts. […] There is a beauty and joy here that you cannot fathom. It feels so good that if the sensation were translated into tears of gratitude, rivers would overflow.”

And here’s a bit of the description of today’s everyday life:

“the ever-falling soot of ordinary life […] Always and always: soot, casting its pall over glamours and revelries, despoiling your epiphany, sodding up your finest collar. And once again that familiar numbing beat of routine rolling along its familiar tracks.”

To a longtermist, the brain has *got* to be algorithmic, else how will we upload ourselves into computers and live forever in bliss?

I’m not saying that cognitive scientists are all longtermists indulging in motivated reasoning. I’m more saying that the very idea of “motivated reasoning” is one half of another good/bad dichotomy, reflecting the *emotionally necessary* existence of *un*motivated reasoning, reasoning that is pure and uncontaminated and somehow eternal or deathless and non-messy and unemotional.

We’ve spent literally thousands of years working out what such a kind of reason – one independent of matter – might be like. We painstakingly created formal logic; necessary-and-sufficient conditions for categories; seeing the world not directly, but via truth-bearing representations; decomposition of wholes into parts; rule-based computation; infinities; recursion; and parsing the difference between capital-T Truth and mere belief or mere argument. All of that got turned into a theory of mind sometimes called “computationalism”.

In a way, it’s a clever acrobatic trick: all that time building up a notion of reason on the imperfect base of our mortal selves, then finishing by saying that *actually* computationalism was inside us all the time. It is the essence of what we are. It’s not built on top of messiness, not according to the right way of thinking – rather the messiness is inessential, a secondary phenomenon, an *epiphenomenon* as they say. What we most centrally *are* is independent of the “implementation substrate”. We are something that can be, modulo some implementation details, lifted out of bags of meat and run on something more reliable and powerful.

Again, I’m not saying that cognitive scientists are transparently motivated by a fear of meat. Rather, they and their forebears – and you and I – have been soaking in this intellectual bath for thousands of years. (Perhaps that’s why our brains are so wrinkled?) The eternal over the mortal, the transcendent over the mundane: these dichotomies are the unquestioned default.

Ecological and embodied cognition directly challenges our defaults. It makes the imperfect world and the ever-fragile body essential, not disposable. Cognition is about survival, not about Truth. Reason isn’t noble, but rather a sometimes-useful hack.

It messes up the pleasing picture.

Something that occurred to me. Time is not what you might call a “first-class object” in algorithms. It appears only as external events, which is why our uploaded selves will be able to “read libraries in the blink of an eye”: we’ll be running on a substrate with a much higher clock speed and higher-level parallelism than neurons can achieve.

But embodied cognition puts time front-and-center, as in the phase-shifting speeds in the Haken-Kelso-Bunz model. So, what if thought turns out to be time-bound: what if we can’t be *us* at a higher clock speed? How horrible if our uploaded selves could do no better than an eternity of “ever-falling soot” and “the familiar numbing beat of routine”. Surely the world could not be so unfair?

≤ music ≥

At some point in my reading, I realized that debates between EEs and computationalists always come down to definitions: what, *precisely*, is a representation? What, *precisely*, is *computation*? If two things are dynamically coupled, are they necessarily part of a single, undecomposable system, or can we understand them individually: two parts rather than one whole?

If you listened to the recent episode “Concepts without categories”, you know what I think about such definitional arguments: we should do something else. In the tradition of this podcast, the something else – next episode, if I can get my thoughts together – will be accepting Andy Clark’s ideas about how animals use representations and rules, and seeing what those ideas might suggest for the design and programming of actual systems. As a teaser: Clark’s approach, I think, to something like domain-driven design would be to center the ubiquitous language on verbs rather than nouns. Free-standing, ephemeral closures that capture relevant bits of a context rather than methods on classes.

We shall see. Until then, thank you for listening.